Asynchronous computing addresses a need that has been at the forefront of application development since the earliest days of computing: ensuring fast application response between systems. Whereas synchronous architectures require a caller to wait for a response from a called function before moving on with the program flow, asynchronicity allows an external function or process to work independently of the caller.

The caller makes a request to execute work, and the external function or process “gets back” to the caller with the result when the work is completed. In the meantime, the caller moves on. The benefit is that the caller is not tied to the called function while the work is being performed, so the risk of blocking is minimal.

Reducing Wait Time with Multithreading

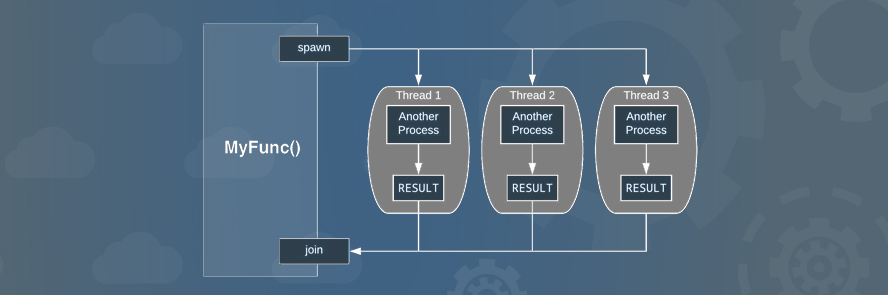

One common way to implement asynchronicity internally in an application is to use multithreading (see figure 1).

Figure 1: Using multithreading is a way to implement asynchronicity within a standalone application

In a multithreaded environment, a function spawns independent threads that perform work concurrently. One thread does not hold up the work of another. Then, when work is complete, the results are collected together and passed back to the calling function for further processing.
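The following is a minimal Python sketch of that flow, not a definitive implementation. The function names `fetch_record` and `fetch_all` are hypothetical, and the `time.sleep` call is an assumption standing in for real I/O such as a network request.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def fetch_record(record_id: int) -> dict:
    """Hypothetical unit of work; the sleep stands in for slow I/O."""
    time.sleep(0.1)
    return {"id": record_id, "status": "done"}


def fetch_all(record_ids: list[int]) -> list[dict]:
    """Run one fetch per worker thread and collect the results for the caller."""
    if not record_ids:
        return []
    with ThreadPoolExecutor(max_workers=len(record_ids)) as pool:
        # pool.map yields results in input order; list() waits for all of them.
        return list(pool.map(fetch_record, record_ids))


start = time.perf_counter()
results = fetch_all([1, 2, 3, 4])
elapsed = time.perf_counter() - start
print(f"{len(results)} results in {elapsed:.2f}s")
```

Because the four waits overlap, the elapsed time should be closer to one unit of work than to four.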

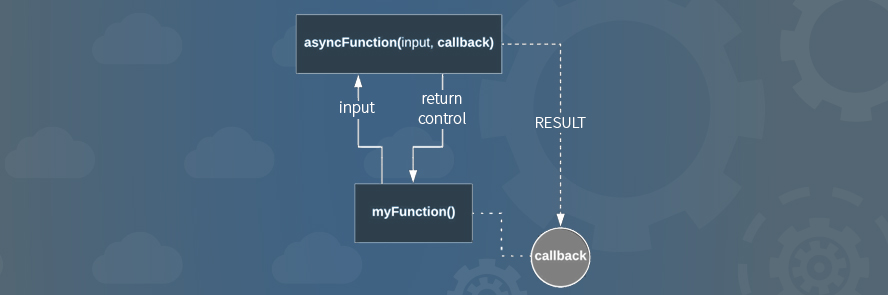

An event-driven runtime such as Node.js implements asynchronicity using callback functions. In the callback model, a caller passes the called function a reference to another function, the callback function, which gets invoked when the work is completed.

Figure 2: Using a callback allows a function to work independently of the caller

The benefit of using callbacks to implement an asynchronous relation between calling function and called function is that it allows the overall application to work at maximum efficiency. No single process is holding up another process from doing work.
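The callback model can be sketched in a few lines. This example uses Python rather than Node.js, and the function `analyze_async` is hypothetical; the uppercase conversion is only a placeholder for real work.

```python
import threading


def analyze_async(payload: str, on_done) -> threading.Thread:
    """Start work on a background thread; call on_done(result) when it finishes."""

    def worker() -> None:
        result = payload.upper()  # placeholder for real work
        on_done(result)           # the callback receives the result

    thread = threading.Thread(target=worker)
    thread.start()
    return thread


received = []
thread = analyze_async("hello", received.append)
# The caller is free to do other work here while the worker runs.
thread.join()
print(received)  # ['HELLO']
```

The caller never waits inside `analyze_async`. It only joins the thread at the end so that the demonstration can print the result.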

The callback pattern is useful for implementing an asynchronous interaction among distributed systems, particularly web-based systems using HTTP. HTTP is based on the request-response pattern. Typically, a caller makes a request for information to a server on the internet. The server takes the request, does some work, and then provides a response with the result. The interaction is synchronous. The calling system — a web page, for example — is bound to the server until the response is received.

When the server responds within a few milliseconds, there is little impact on the caller. But when the server takes a long time to respond, problems can arise. Even so, there are legitimate reasons for a request to take a long time to process.

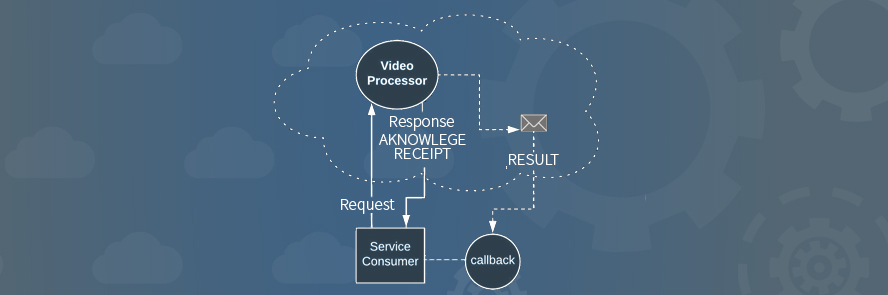

For example, imagine a fictitious service that is able to determine the number of times a particular person smiles in a given YouTube video. The caller passes the URL of the YouTube video to the service. The service gets the video from YouTube, as defined by the submitted URL. Then the service analyzes the video and counts the smiles.

One video might have a one-minute runtime, and another might have a 30-minute runtime. Either runtime is acceptable to the service looking for smiles. However, a caller using the service does not want to stay connected for the duration of the 30-minute analysis. A better approach is for the video analysis service to respond quickly with an acknowledgment of receipt. Then, when work is completed, the analysis service sends the results back to the caller at a callback URL. (Figure 3 below shows an illustration of this example.)

Figure 3: Web-based applications can use the callback pattern to implement an asynchronous system architecture
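The exchange in the smile-counting example can be simulated in-process, without any network traffic. Everything in this sketch is an assumption made for illustration: the class `SmileAnalysisService`, the `send_callback` function that stands in for an HTTP POST to the callback URL, the example URLs, and the placeholder smile count. The acknowledgment uses status 202, the HTTP code for a request accepted for later processing.

```python
import threading
import uuid


class SmileAnalysisService:
    """Hypothetical in-process stand-in for the video analysis service."""

    def __init__(self, send_callback):
        # send_callback(callback_url, body) stands in for an HTTP POST to the caller.
        self._send_callback = send_callback
        self._workers = []

    def submit(self, video_url: str, callback_url: str) -> dict:
        """Accept the job and acknowledge at once; analysis runs in the background."""
        job_id = str(uuid.uuid4())
        worker = threading.Thread(
            target=self._analyze, args=(job_id, video_url, callback_url)
        )
        worker.start()
        self._workers.append(worker)
        return {"status": 202, "job_id": job_id}  # acknowledgment of receipt

    def _analyze(self, job_id: str, video_url: str, callback_url: str) -> None:
        smile_count = len(video_url) % 7  # placeholder, not a real analysis
        self._send_callback(callback_url, {"job_id": job_id, "smiles": smile_count})

    def wait(self) -> None:
        """Block until all background jobs finish (used only by this demo)."""
        for worker in self._workers:
            worker.join()


inbox = []  # what the caller's callback endpoint would receive
service = SmileAnalysisService(lambda url, body: inbox.append((url, body)))
ack = service.submit("https://example.com/video/abc", "https://caller.example/callback")
service.wait()
print(ack["status"], inbox[0][1]["job_id"] == ack["job_id"])  # 202 True
```

The job ID returned in the acknowledgment is what lets the caller match the later callback to the original request.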

Asynchronous services are useful. However, they do make performance testing more difficult.

Measuring the performance of a synchronous service typically happens in a sequential manner: You define the virtual users that will execute the given test script. The test runs, requests are made and the results from responses are gathered. One step leads to another. But when it comes to asynchronous testing, one step does not lead to another. One step might start an asynchronous process that finishes well after the test is run.

The risk when testing asynchronous applications is that the process becomes independent of its caller. When the called process is on an external machine or in another web domain, its behavior becomes hidden. The performance of the called process can no longer be accurately tested within the scope of the request-response exchange. Other ways of observing performance need to be implemented.
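A small sketch makes the gap concrete. It assumes a hypothetical endpoint, `start_job`, that returns immediately and finishes its work on a background thread. The `measure` function records two numbers: how long the initial call took, and how long it took for the work to actually complete.

```python
import threading
import time


def start_job(work_seconds: float, on_complete) -> None:
    """Hypothetical async endpoint: returns at once, finishes the work later."""

    def worker() -> None:
        time.sleep(work_seconds)  # stand-in for the real processing
        on_complete()

    threading.Thread(target=worker).start()


def measure(work_seconds: float, timeout: float = 5.0) -> dict:
    """Time the initial call and, separately, the completion of the work."""
    done = threading.Event()
    t0 = time.perf_counter()
    start_job(work_seconds, done.set)
    ack_seconds = time.perf_counter() - t0  # what a request-response test sees
    finished = done.wait(timeout)           # what the test must also observe
    completion_seconds = time.perf_counter() - t0 if finished else None
    return {"ack_seconds": ack_seconds, "completion_seconds": completion_seconds}


timings = measure(0.2)
print(timings)
```

A test that stops at `ack_seconds` would report a fast service even though the work takes far longer to finish.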


Leveraging Event Information in Logs

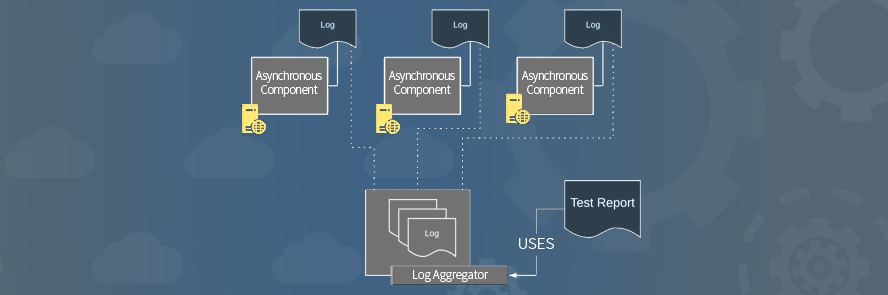

One of the easiest ways to observe performance in a distributed asynchronous application is to leverage the power of logs. Logs provide the glue that binds separate systems together. Depending on the logging mechanism in force, logs can capture function timespan, memory and CPU capacity utilization, as well as network throughput, to name a few metrics.

Once a system is configured so that every component of an asynchronous application is logging information in a consistent, identifiable manner, the remaining work to be done is to aggregate the log information in order to create a unified picture of performance activity overall (see figure 4).

Figure 4: Aggregating logs from asynchronous components provides information useful when reporting application performance

Passing a unique correlation ID among all components that take part in an asynchronous transaction provides a reliable way to group their log entries for subsequent analysis.
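As an illustration of grouping by correlation ID, the sketch below aggregates a handful of log entries from three components. The entries, field names (`ts_ms`, `component`, `correlation_id`) and component names are all hypothetical; real logs would first need to be parsed into a similar shape.

```python
from collections import defaultdict

# Hypothetical, already-parsed log entries collected from three components.
LOG_ENTRIES = [
    {"ts_ms": 1000, "component": "api", "correlation_id": "a1"},
    {"ts_ms": 1500, "component": "api", "correlation_id": "b2"},
    {"ts_ms": 1040, "component": "worker", "correlation_id": "a1"},
    {"ts_ms": 1600, "component": "worker", "correlation_id": "b2"},
    {"ts_ms": 4600, "component": "notifier", "correlation_id": "b2"},
    {"ts_ms": 31040, "component": "notifier", "correlation_id": "a1"},
]


def summarize(entries: list[dict]) -> dict:
    """Group entries by correlation ID and compute each transaction's timespan."""
    grouped = defaultdict(list)
    for entry in entries:
        grouped[entry["correlation_id"]].append(entry)

    summary = {}
    for correlation_id, group in grouped.items():
        ordered = sorted(group, key=lambda e: e["ts_ms"])
        summary[correlation_id] = {
            "duration_ms": ordered[-1]["ts_ms"] - ordered[0]["ts_ms"],
            "path": [e["component"] for e in ordered],
        }
    return summary


for correlation_id, info in summarize(LOG_ENTRIES).items():
    print(correlation_id, info["duration_ms"], " -> ".join(info["path"]))
```

In this made-up data, transaction `a1` spans 30,040 ms from the first log entry to the last, a duration that the initial request-response alone would not reveal.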

The important thing to remember is that in an asynchronous application, any execution path can use a variety of processes that work independently of one another. Application flow can just hop about in a nonlinear manner. Thus, the way to tie all relevant performance metrics together is to aggregate log data from the various systems that make up the application.

Using an Application Performance Monitor

Application performance monitors (APMs) are fast becoming a standard part of most deployment environments. An APM is an agent that gets installed on a machine, either virtual or physical, as part of the provisioning process. APMs observe machine behavior at a very low level. For example, most APMs will report CPU utilization, memory allocation and consumption, network I/O and disk activity. The information that an APM provides is very useful when determining application performance during a test.

Figure 5: System monitors provide low-level information that gives an added dimension to performance testing

Information provided by APMs is particularly useful when it comes to performance testing asynchronous applications. Much in the same way that log information from independent components can be tied together to provide a fuller understanding of performance test behavior overall, so too can information that gets emitted from an APM.

A bottleneck occurring in one system might not be apparent in the initial request-response that started the asynchronous interaction, and a fast request-response time can be misleading. More information is needed. APM data from all the systems used by an application can reveal performance shortcomings that measuring the initial request and response alone would miss.
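One way to picture this is to scan resource samples from every host over the full test window, not only the moment of the initial request. The sketch below assumes the APM data has already been exported into simple records. The `Sample` type, the host names, the readings and the 90 percent threshold are all hypothetical and do not reflect any particular APM product's format.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    host: str
    ts: int             # seconds since the start of the test
    cpu_percent: float


# Hypothetical samples exported from the monitors on two hosts.
SAMPLES = [
    Sample("api-1", 0, 12.0), Sample("api-1", 30, 15.0), Sample("api-1", 60, 11.0),
    Sample("worker-1", 0, 35.0), Sample("worker-1", 30, 97.0), Sample("worker-1", 60, 99.0),
]


def find_bottlenecks(samples, window_start, window_end, cpu_threshold=90.0):
    """Return {host: peak CPU} for hosts at or above the threshold in the window."""
    peaks = {}
    for sample in samples:
        if window_start <= sample.ts <= window_end:
            peaks[sample.host] = max(peaks.get(sample.host, 0.0), sample.cpu_percent)
    return {host: peak for host, peak in peaks.items() if peak >= cpu_threshold}


print(find_bottlenecks(SAMPLES, 0, 60))  # {'worker-1': 99.0}
```

Here the host that answered the initial request looks healthy, while the worker that performs the asynchronous processing is saturated.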

Putting It All Together

Performance testing asynchronous applications is difficult, but it’s made easier if proper test planning takes place. Testing asynchronous applications requires more than measuring the timespan between the call to an application and the response received back. Asynchronous applications work independently of the caller, so the means by which to observe behavior throughout the application must be identified before any testing takes place.

A good test plan will incorporate information from machine logs and application performance monitors to construct an accurate picture of the overall performance of the application. Should log and APM information not be available, then test personnel will do well to work with DevOps personnel to make the required information available.

Being able to aggregate all the relevant information from every part of a given asynchronous application is critical for ensuring accurate performance testing. A performance test is only as good as the information it consumes and the information it produces.