This is a guest posting by Bob Reselman

The digital infrastructure of the modern IT enterprise is a complex place. The days of one server attached to a router at a dedicated IP that’s protected by a hand-configured firewall are gone.

Today, we live in a world of virtualization and ephemeral computing. Computing infrastructure expands and contracts automatically to satisfy the demands of the moment. IP addresses come and go. Security policies change by the minute. Any service can be anywhere at any time. New forms of automation are required to support an enterprise that's growing beyond the capabilities of human management. One such technology appearing on the digital landscape is the service mesh.

The service mesh extends automation's capabilities in environment discovery, operation and maintenance. Not only is the service mesh changing how services get deployed into a company's digital environment, but it is also going to play a larger role in system reliability and performance. As a result, those concerned with performance and reliability testing will need an operational grasp of how the service mesh works, particularly when it comes to routing and retries.

As the service mesh becomes the standard way to control traffic between services, performance test engineers will need to be familiar with the technology when creating test plans for architectures that use one.


The case for the service mesh

The service mesh solves two fundamental problems in modern distributed computing: finding the location of a service within the technology stack, and defining how to accommodate service failure.

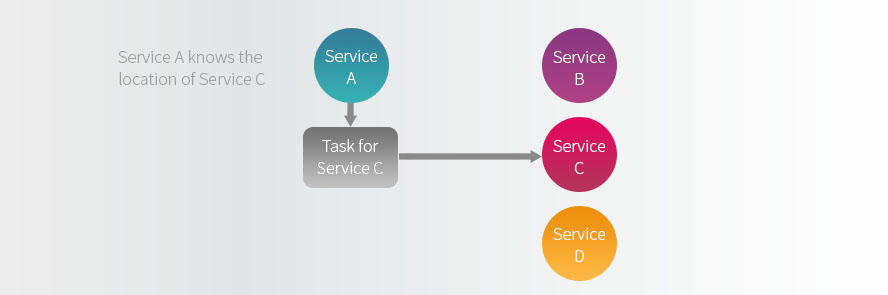

Before the service mesh came along, each service needed to know the location of service upon which it depended. For example, as shown below in figure 1, in order for Service A to be able to pass work to Service C, it need to know the exact location of Service C. The location might be defined as an IP address or as a DNS name. Should the location of the dependent service change, at best, a configuration setting might need to be altered; at worst, the entire consuming service might need to be rewritten.

Figure 1: Before the service mesh, a service needed to know the exact location of other services it uses

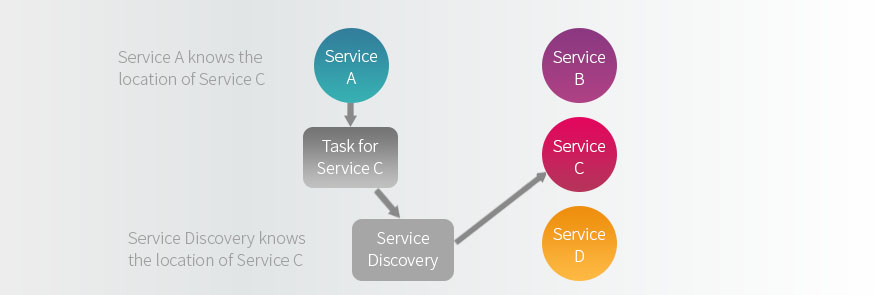

Tight coupling between services proved to be brittle and hard to scale, so companies started to use service discovery technologies such as ZooKeeper, Consul and Etcd, which alleviated the need for services to have knowledge of the location of other services upon which they depended. (See figure 2.)

Figure 2: Service discovery has complete knowledge of all services in the system and routes work between services
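To make the contrast concrete, here is a minimal Python sketch of the difference between a hard-coded dependency and a lookup through a registry. The `ServiceRegistry` class, the service names and the addresses are hypothetical stand-ins; real tools such as ZooKeeper, Consul and etcd expose their own APIs, health checking and consistency guarantees, none of which are modeled here.

```python
import random
from dataclasses import dataclass, field

# Before service discovery: Service A hard-codes where Service C lives.
# If Service C moves, this constant (or the code around it) has to change.
HARDCODED_SERVICE_C = "10.0.0.12:8080"  # hypothetical address


@dataclass
class ServiceRegistry:
    """Hypothetical in-memory registry mapping service names to live instances."""

    instances: dict[str, list[str]] = field(default_factory=dict)

    def register(self, name: str, address: str) -> None:
        # An instance announces itself when it starts.
        self.instances.setdefault(name, []).append(address)

    def deregister(self, name: str, address: str) -> None:
        # An instance is removed when it stops or is scaled away.
        if address in self.instances.get(name, []):
            self.instances[name].remove(address)

    def resolve(self, name: str) -> str:
        # Consumers ask for a service by name, not by location.
        candidates = self.instances.get(name)
        if not candidates:
            raise LookupError(f"no live instances of {name!r}")
        return random.choice(candidates)  # naive load spreading


registry = ServiceRegistry()
registry.register("service-c", "10.0.0.12:8080")
registry.register("service-c", "10.0.0.47:8080")
registry.deregister("service-c", "10.0.0.12:8080")  # the old instance goes away
print(registry.resolve("service-c"))  # Service A still finds Service C
```

Service A only needs the name `service-c`; where the instance actually runs can change without touching Service A's code.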

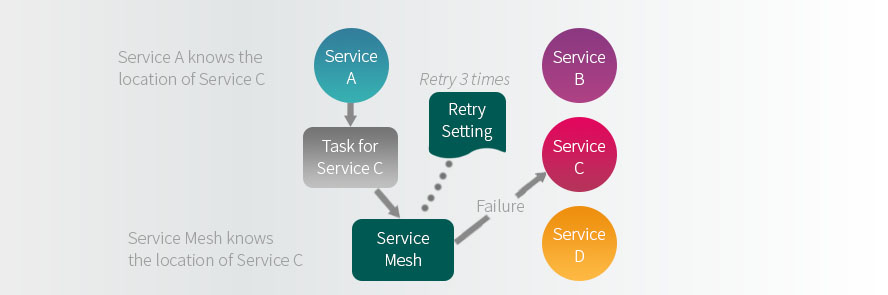

However, one of the problems that was still outstanding was what a service does when one of the dependencies fails. Should the service just error out? Should it retry? If it should retry, how many times? This is where the service mesh comes in.

The service mesh combines, among other things, service discovery and failure policy. In other words, not only will the service mesh allow services to interact with one another, it will also execute retries, redirection or aborts, based on a policy configuration. (See figure 3.)

Figure 3: The service mesh can be configured to retry a service in the event of failure
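A failure policy can be pictured as a small decision function: given how a call failed and how many attempts have been made, choose to retry, redirect or abort. The sketch below is a hypothetical illustration in Python; `FailurePolicy`, its default retryable codes and the fallback name are assumptions for the example, not the configuration model of any real mesh.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    RETRY = "retry"
    REDIRECT = "redirect"
    ABORT = "abort"


@dataclass(frozen=True)
class FailurePolicy:
    """Hypothetical policy: which failures to retry, and where to go after that."""

    retry_on: frozenset[int] = frozenset({502, 503})
    max_retries: int = 2
    fallback: Optional[str] = None  # e.g. a standby instance to redirect to

    def decide(self, status: int, attempts: int) -> Action:
        """`attempts` counts calls already made, including the one that just failed."""
        # Retryable error and retry budget left: call the same service again.
        if status in self.retry_on and attempts <= self.max_retries:
            return Action.RETRY
        # Out of retries (or not retryable) but a fallback exists: redirect.
        if self.fallback is not None:
            return Action.REDIRECT
        # Nothing else to try: fail the call back to the caller.
        return Action.ABORT


policy = FailurePolicy(fallback="service-c-standby")
print(policy.decide(502, attempts=1))  # first failure, budget left: retry
print(policy.decide(502, attempts=3))  # retries spent, fallback exists: redirect
print(FailurePolicy().decide(404, attempts=1))  # not retryable, no fallback: abort
```

With `max_retries=2`, a call gets at most three attempts before the policy moves on to a redirect or an abort.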

The service mesh is the infrastructure layer that routes traffic between services and provides fail-safe mechanisms for them. In addition, a service mesh logs all activity in its purview, providing fine-grained insight into overall system performance. This type of logging makes distributed tracing possible, which makes monitoring and troubleshooting all services in the system a lot easier, no matter where they run.

Popular technologies in the service mesh landscape include Linkerd, Istio and Envoy, the proxy that Istio uses to carry traffic between services.

What’s important to understand about the service mesh from a performance testing perspective is that the technology has a direct effect on system performance. Consequently, test engineers should have at least a working knowledge of the whys and hows of service mesh technologies. Test engineers also will derive a good deal of benefit from integrating the data that the service mesh generates into test planning and reporting.


Accommodating the service mesh in performance test planning

How might performance test engineers take advantage of what the service mesh has to offer? It depends on the scope of the performance testing and the interests of the test engineer. If the engineer is concerned only with response time between the web client and web server, understanding the nature and use of a service mesh has limited value. However, if testing extends into an application's server-side performance, things get interesting.

The first, most telling benefit is that a service mesh supports distributed tracing. This means that the service mesh makes it possible to observe the time it takes for all services in a distributed architecture to execute, so test engineers can identify performance bottlenecks with greater accuracy. Then, once a bottleneck is identified, test engineers can correlate tracing data with configuration settings to get a clearer understanding of the nature of performance problems.
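To illustrate what "greater accuracy" means, here is a minimal Python sketch that takes the spans from one traced request and works out how much time each service spent on its own work, excluding the downstream calls it was waiting on. The `Span` record and its fields are a simplified, hypothetical format, not the data model of any particular tracing system, and the calculation assumes a span's child calls don't overlap in time.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """One timed hop in a trace (hypothetical, simplified record)."""

    span_id: str
    parent_id: Optional[str]
    service: str
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def self_times(spans: list[Span]) -> dict[str, float]:
    """Time each service spent on its own work, excluding its downstream calls.

    Assumes a span's child calls run one after another (no overlap).
    """
    child_time: dict[str, float] = {}
    for s in spans:
        if s.parent_id is not None:
            child_time[s.parent_id] = child_time.get(s.parent_id, 0.0) + s.duration_ms
    totals: dict[str, float] = {}
    for s in spans:
        own = s.duration_ms - child_time.get(s.span_id, 0.0)
        totals[s.service] = totals.get(s.service, 0.0) + own
    return totals


# Hypothetical trace: service-a handles the request and calls b, then c.
trace = [
    Span("1", None, "service-a", 0, 120),
    Span("2", "1", "service-b", 5, 25),
    Span("3", "1", "service-c", 30, 110),
]
times = self_times(trace)
bottleneck = max(times, key=times.get)
print(times, bottleneck)
```

The end-to-end time of 120 ms says nothing about where the time went; the per-service breakdown points at service-c, which accounts for 80 ms of it.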

In addition to being an information resource, the service mesh becomes a subject of testing itself. Service mesh configuration has a direct impact on system performance, which adds a new dimension to performance testing: just as application logic needs to be performance tested, so does service mesh activity. This is particularly important for auto-retries, request deadline settings and circuit-breaking configuration.

In terms of a service mesh, what are auto-retries, a request deadline and circuit breaking?

An auto-retry is a service mesh setting that causes a call to a dependent service to be retried automatically when that service returns a certain type of error code. For example, if Service A calls Service B and Service B returns a 502 (Bad Gateway) error, the service mesh retries the call on Service A's behalf up to a predefined number of times. 502 errors are often transient, which makes a retry a reasonable response.
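The retry behavior described above can be sketched as a short loop. This is a minimal Python illustration of what a mesh proxy does on the caller's behalf, not any mesh's actual implementation; `call_with_retries` and the flaky Service B are hypothetical, and real meshes typically also wait (back off) between attempts, which is omitted here.

```python
from typing import Callable, Iterable

RETRYABLE = {502}  # assumption: retry only on 502 Bad Gateway


def call_with_retries(call: Callable[[], int], max_retries: int,
                      retry_on: Iterable[int] = RETRYABLE) -> tuple[int, int]:
    """Call a dependent service, retrying on retryable status codes.

    Returns (final_status, attempts_made). At most 1 + max_retries attempts.
    """
    retry_on = set(retry_on)
    attempts = 0
    while True:
        attempts += 1
        status = call()
        # Stop on a non-retryable result or once the retry budget is spent.
        if status not in retry_on or attempts > max_retries:
            return status, attempts


# Hypothetical Service B that fails twice with 502 and then recovers.
responses = iter([502, 502, 200])
status, attempts = call_with_retries(lambda: next(responses), max_retries=3)
print(status, attempts)  # 200 3
```

From Service A's point of view the call simply succeeded; Service B, however, received three requests, which is exactly the kind of hidden load a performance test should account for.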

A request deadline is similar to a timeout. A request is allowed a certain amount of time to execute against a called service. If the deadline is reached, the request fails regardless of the retry settings, which keeps an undue load burden off the called service.
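Here is a minimal sketch of how a deadline caps retries. Time is simulated (each hypothetical attempt reports how long it took) so the example runs instantly; `call_with_deadline` is an illustrative name, not a mesh API, and a real proxy would cut off the in-flight attempt at the deadline rather than learn its duration afterward.

```python
from typing import Callable


def call_with_deadline(call: Callable[[], tuple[int, float]], max_retries: int,
                       deadline_ms: float) -> tuple[str, float]:
    """Retry on 502, but give up once the overall deadline is spent.

    `call` returns (status, elapsed_ms) for one attempt.
    Returns (outcome, total_elapsed_ms).
    """
    elapsed = 0.0
    for _ in range(max_retries + 1):
        status, took = call()
        if elapsed + took > deadline_ms:
            # The attempt would run past the deadline: the caller stops waiting
            # at the deadline, whatever retries remain.
            return "deadline exceeded", deadline_ms
        elapsed += took
        if status != 502:
            return f"status {status}", elapsed
    return "retries exhausted", elapsed


def slow_502() -> tuple[int, float]:
    """Hypothetical struggling Service B: fails with 502 after 400 ms."""
    return 502, 400.0


print(call_with_deadline(slow_502, max_retries=5, deadline_ms=1000))
print(call_with_deadline(slow_502, max_retries=5, deadline_ms=float("inf")))
```

With a 1,000 ms deadline the caller gives up during the third attempt; without one, it waits through all six attempts and 2,400 ms before failing anyway.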

Circuit breaking is a way to prevent cascading failure, in which one point in the system (a service, for example) fails and causes other points to fail. A circuit breaker is a mechanism that is “wrapped” around a service so that if the service is in a failure state, the circuit breaker “trips.” Calls to the failing service are then rejected as errors immediately, without incurring the overhead of routing to and invoking the service. A service mesh circuit breaker also records attempted calls to the failed service and alerts monitors observing service mesh activity that the circuit breaker has tripped.
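The trip-and-reject behavior can be sketched as a small state machine with closed, open and half-open states. This is a minimal Python illustration; the thresholds, the 503 returned for rejected calls and the `on_trip` alert hook are assumptions chosen for the example, and real meshes configure circuit breaking differently and with more options. A fake clock is injected so the behavior can be exercised without waiting.

```python
import time
from typing import Callable


class CircuitBreaker:
    """Minimal circuit breaker sketch: closed -> open -> half-open -> closed."""

    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic,
                 on_trip: Callable[[str], None] = print) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.on_trip = on_trip  # hypothetical hook standing in for a monitor alert
        self.state = "closed"
        self.failures = 0
        self.opened_at = 0.0
        self.rejected = 0  # calls turned away while open, recorded for monitoring

    def call(self, fn: Callable[[], int]) -> int:
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_after_s:
                # Fail fast: don't route to the failing service at all.
                self.rejected += 1
                return 503
            self.state = "half-open"  # cool-down over: allow one trial call
        status = fn()
        if status >= 500:
            self.failures += 1
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self._trip()
        else:
            # A success closes the breaker and clears the failure count.
            self.state = "closed"
            self.failures = 0
        return status

    def _trip(self) -> None:
        self.state = "open"
        self.opened_at = self.clock()
        self.on_trip(f"circuit opened ({self.failures} failures)")


now = [0.0]  # fake clock, in seconds
alerts: list[str] = []
breaker = CircuitBreaker(failure_threshold=3, reset_after_s=30.0,
                         clock=lambda: now[0], on_trip=alerts.append)

for _ in range(5):  # Service B is down: 3 failures, then 2 fast rejections
    breaker.call(lambda: 500)
print(breaker.state, breaker.rejected, alerts)

now[0] = 31.0  # cool-down elapsed and Service B has recovered
print(breaker.call(lambda: 200), breaker.state)
```

The `rejected` counter and the alert list are the kind of signals a performance test can check: how quickly the breaker trips under load, and how many calls it spares the failing service.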

As the service mesh becomes part of the enterprise system architecture, performance test engineers will do well to make service mesh testing part of the overall performance testing plan.
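One way to fold auto-retries into a test plan is to estimate how much extra load they put on a dependent service. The sketch below assumes each attempt fails independently with the same probability p and that the mesh retries after every failure until `max_retries` is spent; under those assumptions, the expected number of calls per original request is 1 + p + p^2 + ... + p^max_retries. The function names are hypothetical, and failures during a real outage are rarely independent, so treat the result as a rough planning figure.

```python
def expected_attempts(failure_rate: float, max_retries: int) -> float:
    """Expected calls a dependent service receives per original request.

    Assumes every attempt fails independently with probability failure_rate
    and a retry follows each failure until max_retries is spent.
    """
    if not 0.0 <= failure_rate <= 1.0:
        raise ValueError("failure_rate must be between 0 and 1")
    return sum(failure_rate ** k for k in range(max_retries + 1))


def upstream_load(requests_per_sec: float, failure_rate: float, max_retries: int) -> float:
    """Requests per second actually reaching the dependent service."""
    return requests_per_sec * expected_attempts(failure_rate, max_retries)


# 1,000 req/s against a healthy dependency: retries barely add load (about 1,010 req/s).
print(upstream_load(1000, 0.01, 3))
# The same traffic while the dependency fails half the time: 1875.0 req/s.
print(upstream_load(1000, 0.5, 3))
```

Retries amplify load precisely when a dependency is already struggling, so a load test that never injects failures will miss that effect.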

Putting it all together

The days of old-school performance testing are coming to a close. Modern applications are just too complex to rely on measuring request and response times between client and server alone. There are too many moving parts. Enterprise architects understand the need to implement technologies that allow for dynamic provisioning and operations without sacrificing the ability to observe and manage systems, regardless of size and rate of change.

As the spirit of DevOps continues to permeate the IT culture, the service mesh is becoming a key component of the modern distributed enterprise. Having a clear understanding of the value and use of service mesh technologies will allow test engineers to add a new dimension to performance testing processes and planning, and performance testers who are well-versed in the technology will ensure that the service mesh is used to optimum benefit.

Article by Bob Reselman, a nationally known software developer, system architect, industry analyst and technical writer/journalist. Bob has written many books on computer programming and dozens of articles about software development technologies and techniques, as well as the culture of software development. Bob is a former Principal Consultant for Cap Gemini and Platform Architect for the computer manufacturer Gateway. In addition to his software development and testing activities, Bob is in the process of writing a book about the impact of automation on human employment. He lives in Los Angeles and can be reached on LinkedIn at www.linkedin.com/in/bobreselman.
