This is a guest post by Jim Holmes.

Exploratory testing is at its best, in my opinion, when it's guided by an understanding of the current risks in the system you're testing. I'm a fan of Lean principles, and the notion of waste strikes a powerful chord with me. As such, I want to understand what automated testing already exists in a system so I can better decide where to focus my exploratory testing efforts.

In this effort, one handy tool I've used for many years has been code coverage metrics. Code coverage tracks which portions of the codebase are exercised by automated tests and shows which portions are covered and which aren't. Coverage results are normally broken out by major pieces (think JAR or DLL files), then by components within those pieces (classes, methods, etc.).

These metrics are normally generated as part of a build pipeline by tools such as SonarQube or NCover. Code coverage can also be generated locally on a developer's or tester's system with various tools, including Visual Studio, the aforementioned NCover, and others.

Code coverage information can be generated from different types of automated tests, depending on the toolset used for testing and measurement. Virtually every code coverage tool works with unit tests. Most work with integration/service/API tests, and many can be configured to report coverage for automated functional tests at the user interface (UI) level: think of coverage generated for your WebDriver UI tests.

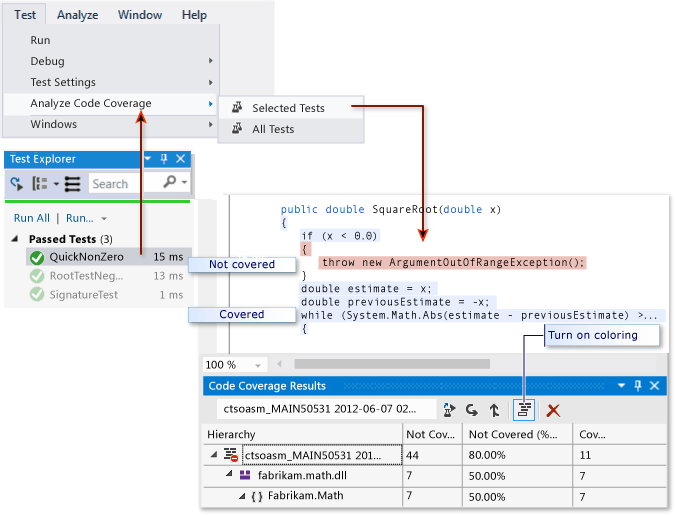

For example, this graphic from Microsoft’s Visual Studio documentation explicitly shows a block of code within a Math component. Part of the SquareRoot method is covered by tests, but part is uncovered.

Code Coverage in Visual Studio 2017. Source: Microsoft
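To make the covered/uncovered idea concrete, here is a minimal line-coverage sketch in Python. It makes no claim about how Visual Studio, NCover, or SonarQube gather coverage; it simply uses Python's standard `sys.settrace` hook to record which lines of a function ran during a "test". The `square_root` function and the helpers `executable_lines` and `covered_lines` are hypothetical, loosely modeled on the SquareRoot example above.

```python
import dis
import math
import sys


# Hypothetical stand-in for the SquareRoot method in the screenshot above.
def square_root(x: float) -> float:
    if x < 0:
        raise ValueError("x must be non-negative")
    return math.sqrt(x)


def executable_lines(func) -> set[int]:
    """Source lines in func's body that have bytecode (the def line excluded)."""
    code = func.__code__
    lines = {line for _, line in dis.findlinestarts(code) if line is not None}
    lines.discard(code.co_firstlineno)
    return lines


def covered_lines(func, *args) -> set[int]:
    """Call func(*args) and return the set of its source lines that executed."""
    hits: set[int] = set()
    target = func.__code__

    def tracer(frame, event, arg):
        # Only follow frames running the function under measurement.
        if frame.f_code is not target:
            return None
        if event == "line":
            hits.add(frame.f_lineno)
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    except Exception:
        pass  # this sketch only cares which lines ran, not whether func raised
    finally:
        sys.settrace(None)
    return hits


# A "test suite" that only ever exercises the happy path.
first = square_root.__code__.co_firstlineno
missed = executable_lines(square_root) - covered_lines(square_root, 16.0)
print("uncovered line offsets:", sorted(n - first for n in missed))
# → uncovered line offsets: [2]   (the raise line, counted from the def line)
```

Adding a second call with a negative input, such as `covered_lines(square_root, -4.0)`, and combining both hit sets leaves nothing uncovered. That gap, the untested error branch, is exactly what a coverage report makes visible.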

Code coverage can be a horrible, badly misused metric. It gives zero indication of whether the tests covering a block are actually **GOOD** tests. A block of code could conceivably be 100% covered by an automated test that simply invoked that block yet made no effective assertions that the block worked as expected!
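Here is a minimal sketch of that failure mode, assuming a hypothetical `apply_discount` function with a deliberate bug. Both checks below execute every line of the function, so a coverage tool would report the same 100% for each, but only the second one can catch the bug. The names are illustrative, and plain functions stand in for a real test framework.

```python
def apply_discount(price: float, percent: float) -> float:
    # Hypothetical function with a deliberate bug: it adds the discount instead of subtracting it.
    return price + price * percent / 100


def covering_check_without_assertions() -> None:
    # Executes every line of apply_discount, so coverage reports 100%...
    apply_discount(100.0, 10.0)  # ...but the result is never examined.


def covering_check_with_assertion() -> None:
    # Same 100% coverage, plus an assertion on the expected result.
    assert apply_discount(100.0, 10.0) == 90.0


for check in (covering_check_without_assertions, covering_check_with_assertion):
    try:
        check()
        print(f"{check.__name__}: PASS")
    except AssertionError:
        print(f"{check.__name__}: FAIL")
```

The first check passes and the second fails, even though a coverage report can't tell them apart.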

With that caveat in mind, code coverage can still be a great help in guiding testers to risky, interesting parts of the system. It can also help testers avoid spending time on sections of the system that are already well covered by tests.

I look at code coverage in two ways. First is the technical aspect mentioned above: I can look at build reports or metrics gathered in my local development environment. Second, and to me even more important, I spend time collaborating with the team to understand HOW things are tested.

The technical aspects of code coverage tell me whether I need to look at specific data, or whether I can skip it because it's already covered. Have all boundary conditions been covered with automated tests? Great! I don't need to look at any of that in my exploratory sessions. Is a particular area of the codebase especially low in coverage? Perhaps that area holds low-value, simplistic data container objects that really don't need coverage. (If you're interested, look up and read a bit about POCOs, POJOs, or DTOs.)

The collaborative aspects of code coverage point me to the more interesting, difficult areas I might need to look at. These might include system interactions, edge cases, or testing approaches not understood by the rest of the team.

Let me use a somewhat simplified example from a real-world issue I faced with a client team several years ago. I was brought on to help the testers learn WebDriver. (Hint: it's never just about learning to use WebDriver; it's about learning good testing first, then applying WebDriver and other tools on top of your good testing practices…)

The team gave me a list of 60 tests to automate: checks on a UI grid responsible for computing production line configuration information. The grid had been heavily customized in JavaScript with formulas to calculate the output configuration data, and the checks I was asked to automate all centered on validating that computation.

At that time, the team was undergoing a massive uplift in skills and practices, so I wasn't surprised that the testers hadn't thought to check what developer-level unit tests might already be in place. We looked at build reports from SonarQube to see what test coverage looked like. Coverage metrics looked very good for that part of the system, but the testers weren't yet at the point where they could understand the coverage data they were looking at.

This gave us an opportunity to talk directly with the developers about the coverage, which helped the testers gain confidence in the existing automated tests. Instead of writing 60 UI-level tests to confirm calculations, I was able to write a handful of tests validating functionality.

More importantly, and back to the exploratory nature of this article, we found that the developers had written fairly simplistic tests. Those tests gave us 100% code coverage; however, this functionality relied on combining multiple parameters to compute configuration data. The developers were unaware of the risk of bugs arising from multi-parameter interactions, and of how to mitigate that risk via combinatorial or pairwise testing.
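The client's actual grid formulas aren't described here, so what follows is a hypothetical illustration of the same trap: two parameters, each handled by its own branch. Two tests execute every line and take each branch both ways (100% line and branch coverage), yet the bug only appears when both parameters are set at once. The function name, parameters, and rules are all invented for the example.

```python
def units_per_hour(base_rate: int, rush_order: bool, half_staffed: bool) -> int:
    # Hypothetical rules: a rush order doubles the rate; half staffing halves it.
    rate = base_rate
    if rush_order:
        rate = rate * 2
    if half_staffed:
        rate = rate - base_rate // 2  # bug: should be rate // 2
    return rate


# These two tests alone cover every line and every branch outcome...
assert units_per_hour(100, rush_order=True, half_staffed=False) == 200
assert units_per_hour(100, rush_order=False, half_staffed=True) == 50

# ...but the combination nobody tested gives 150 instead of the expected 100.
print(units_per_hour(100, rush_order=True, half_staffed=True))
```

Each parameter looks correct in isolation; only the pairing of `rush_order=True` with `half_staffed=True` exposes the wrong formula.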

As a result, the testers and I spent time creating a number of pairwise scenarios to use while running exploratory testing sessions. In addition to gaining information about the state of the system when working with complex interactions, we were able to provide some of those test scenarios for the developers to improve their automated testing coverage.
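For readers new to pairwise testing, here is a small greedy sketch of the idea in Python: rather than running every combination of parameter values, keep picking cases until every pair of values for every two parameters has appeared together at least once. The original doesn't say which tool or method the team used, so this is only an illustration. It scans the full Cartesian product at each step, so it suits small parameter sets only; dedicated pairwise tools use smarter strategies. The parameter names in the usage are hypothetical.

```python
from itertools import combinations, product


def pairwise_cases(params: dict[str, list]) -> list[dict]:
    """Greedily choose cases until every value pair of every two parameters is covered."""
    names = list(params)
    index_pairs = list(combinations(range(len(names)), 2))

    def pairs_in(case: tuple) -> set:
        # All ((param_i, value), (param_j, value)) pairs that one full case exercises.
        return {((i, case[i]), (j, case[j])) for i, j in index_pairs}

    all_cases = list(product(*(params[n] for n in names)))
    uncovered = set().union(*(pairs_in(c) for c in all_cases))
    chosen = []
    while uncovered:
        # Greedy step: take the case that covers the most still-uncovered pairs.
        best = max(all_cases, key=lambda c: len(pairs_in(c) & uncovered))
        chosen.append(dict(zip(names, best)))
        uncovered -= pairs_in(best)
    return chosen


params = {
    "line_speed": ["slow", "normal", "fast"],  # hypothetical parameters
    "rush_order": [True, False],
    "half_staffed": [True, False],
}
cases = pairwise_cases(params)
print(len(cases))  # 6, versus 12 for the full product
for case in cases:
    print(case)
```

Greedy selection doesn't guarantee the smallest possible set in general, but here it lands on six cases, which is the minimum for this example because `line_speed` and `rush_order` alone form six value pairs. Note that the combination `rush_order=True, half_staffed=True` is guaranteed to appear.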

Exploratory testing is the best use of good testers’ time and skill. Understanding code coverage is a terrific way to help boost the value of exploratory sessions.

Jim is an Executive Consultant at Pillar Technology, where he works with organizations trying to improve their software delivery process. He's also the owner/principal of Guidepost Systems, which lets him engage directly with struggling organizations. He has been in various corners of the IT world since joining the US Air Force in 1982. He's spent time in LAN/WAN and server management roles in addition to many years helping teams and customers deliver great systems. Jim has worked with organizations ranging from start-ups to Fortune 10 companies to improve their delivery processes and ship better value to their customers. When not at work you might find Jim in the kitchen with a glass of wine, playing Xbox, hiking with his family, or banished to the garage while trying to practice his guitar…