This is a guest post by Jim Holmes.

Years ago Mike Cohn coined the phrase Test Automation Pyramid to describe how teams should view mixing the various types of automated testing. Having a solid grasp of working with different automated test types is critical to help you keep your overall test automation suite lean, low-maintenance, and high-value.

Automated Test Types

While there are many types of automated tests, I’m focusing this article on three specific kinds:

- Unit Tests: Tests which focus on a specific part of one method or class, such as exercising boundaries in an algorithm, or specific cases for validations. Unit tests never cross service boundaries. As such, they’re generally short, concise, and blisteringly fast.

- Integration/Service/API Tests: These tests explicitly hit flows across service boundaries. As such, they invoke web services and/or calls to the database. Because of these cross-service boundaries, they’re generally much slower than unit tests. Integration tests should never spin up or utilize the user interface.

- Functional/User Interface Tests: UI tests drive the application’s front end. They either spawn the application itself (desktop, mobile, etc.) or launch a browser and navigate the web site’s pages. These tests focus on user actions and workflows.

Understanding a Good Mix

I love Mike Cohn’s Test Pyramid as a metaphor reminding me that different aspects of the system are best covered by different types of tests. Some people take exception to its makeup, as they don’t like the notion of being forced to a certain ratio of tests; however, I view the Pyramid as a good starting point for a conversation about how tests for the system at hand should be automated.

For example, validating a computation for multiple input values should NOT be handled via numerous UI tests. Instead, that’s best handled by unit tests. If the computation is run server-side, then a tool like NUnit might be used to write and run the tests. If those computations are in the browser as part of some custom JavaScript code, then a tool such as Jasmine or Mocha could handle those.

Integration tests, also often referred to as service or API tests, should handle validations of major system operations. This might include basic CRUD (Create, Retrieve, Update, Delete) actions, checking proper security of web service endpoints, or proper error handling when incorrect inputs are sent to a service call. It’s common for these tests to run in the same framework/toolset as unit tests (NUnit, JUnit, etc.). Frequently, additional frameworks are leveraged to help handle some of the ceremony around invoking web services, authentication, etc.

User Interface testing should validate major user workflows and should, wherever possible, avoid testing functionality best handled at the unit or integration test level. User interface automation can be slow to write, slow to run, and the most brittle of the three types I discuss here.

With all this in mind, the notion of the Test Automation Pyramid helps us visualize an approach: Unit tests, the base of the pyramid, should generally make up the largest part of the mix. Integration tests form the middle of the pyramid and should be considerably fewer in number than unit tests. The top of the pyramid should be a relatively limited number of carefully chosen UI tests.

It’s important to emphasize that there is NO ACROSS-THE-BOARD IDEAL MIX of tests. Some projects may end up with 70% unit tests, 25% integration, and 5% UI tests. Other projects will have completely different mixes. The critical thing to understand is that the test mix is arrived at thoughtfully and driven by the team’s needs — not driven by some bogus “best practice” metric.

It’s also critical to emphasize that the overall mix of test types evolves as the project completes features. Some features may require more unit tests than UI tests. Other features may require a higher mix of UI tests and very few integration tests. Good test coverage is a matter of continually discussing what types of testing are needed for the work at hand, and understanding what’s already covered elsewhere.

A Practical Example

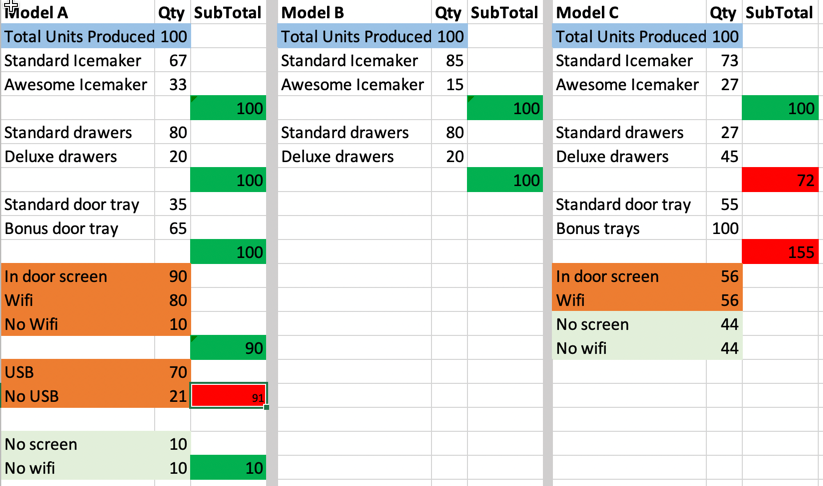

Now that the stage is set, let’s walk through a practical example, in this case one drawn from a real-world project I worked on a few years ago. The project is a system for managing configurations of product lines. The idea is that a manufacturer needs to create a matrix of specific models to build, along with the various configurations of those particular models.

In this example, I’ll use a line of several refrigerator models, each with various options and configurations. The matrix needs to be loaded from storage, various calculations run as part of the product owner’s inputs, then the results saved back to storage.

A rough example of the UI might look similar to an Excel spreadsheet:

Sample Product Management UI

Business-critical rules for the app might include totaling up configuration selections to make sure that subtotals match the overall “Total Units Produced”, and highlighting the cell in red when subtotals don’t match, whether there are too many or too few. Other business-critical rules might include ensuring only authorized users may load or save the matrix.

With all this in mind, a practical distribution might look something like this:

Unit Tests (Only one model shown)

Model A 100 Total Units

- Standard Icemaker 67, Awesome 33, Subtotal == 100 no error (inner boundary)

- Standard Icemaker 68, Awesome 33, Subtotal == 101 Error (outer boundary)

- Standard Icemaker 67, Awesome 34, Subtotal == 101 Error (outer boundary)

- Standard drawers 80, Deluxe 20, Subtotal == 100 no error (inner boundary)

- Standard drawers 81, Deluxe 20, Subtotal == 101 Error (outer boundary)

- Standard drawers 80, Deluxe 21, Subtotal == 101 Error (outer boundary)

- Standard door tray 35, Bonus door tray 65, Subtotal == 100 no error (inner boundary)

- Standard door tray 36, Bonus door tray 65, Subtotal == 101 Error (outer boundary)

- Standard door tray 35, Bonus door tray 66, Subtotal == 101 Error (outer boundary)

- In-door screen sub-configuration, 90 units

  - Wifi units 80, no wifi 10, subtotal == 90 no error (inner boundary)

  - Wifi units 81, no wifi 10, subtotal == 91 Error (outer boundary)

  - Wifi units 80, no wifi 11, subtotal == 91 Error (outer boundary)

  - USB 70, no USB 20, subtotal == 90 no error (inner boundary)

  - USB 71, no USB 20, subtotal == 91 Error (outer boundary)

  - USB 70, no USB 21, subtotal == 91 Error (outer boundary)

- In-door screen 90, no screen 10 units, subtotal == 100 no error (inner boundary)

- In-door screen 90, no screen 11 units, subtotal == 101 Error (outer boundary)

- In-door screen 91, no screen 10 units, subtotal == 101 Error (outer boundary)
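As a rough illustration of how a few of these boundary cases might be written down, here is a minimal sketch using Python’s built-in unittest module rather than NUnit. The function `subtotal_mismatch` and the `MODEL_A_CASES` table are hypothetical names invented for this example; the real project’s validation code isn’t shown in this post and would certainly look different.

```python
import unittest


def subtotal_mismatch(total_units, option_counts):
    """Hypothetical rule: flag an error when the options don't add up to the total."""
    return sum(option_counts) != total_units


# (description, total units, option counts, is an error expected?)
MODEL_A_CASES = [
    ("icemaker 67 + 33 of 100", 100, (67, 33), False),     # inner boundary
    ("icemaker 68 + 33 of 100", 100, (68, 33), True),      # outer boundary
    ("icemaker 67 + 34 of 100", 100, (67, 34), True),      # outer boundary
    ("wifi 80 + 10 of 90 screens", 90, (80, 10), False),   # inner boundary
    ("wifi 81 + 10 of 90 screens", 90, (81, 10), True),    # outer boundary
    ("wifi 80 + 11 of 90 screens", 90, (80, 11), True),    # outer boundary
]


class SubtotalBoundaryTests(unittest.TestCase):
    def test_model_a_boundaries(self):
        # One sub-test per table row, so a failure names the exact case.
        for name, total, counts, expect_error in MODEL_A_CASES:
            with self.subTest(name):
                self.assertEqual(subtotal_mismatch(total, counts), expect_error)
```

Saved in a test module, this would run with `python -m unittest`. Keeping the cases in a table means adding the drawer and door tray rows is a one-line change each, which is the sort of cheap coverage that belongs at the base of the pyramid.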

Integration Tests

The test run setup loads a baseline grid with valid values, then executes tests by invoking web services as both an authorized and an unauthorized user. Include basic CRUD operations (Create, Retrieve, Update, Delete).

Basic Create test

- Invoke “save” service call as an unauthorized user, web service returns expected HTTP error code (e.g., HTTP 403)

- Invoke “save” service call as an authorized user, check the database and validate updated JSON was indeed saved

Basic Retrieve test

- Invoke “load” service call as an unauthorized user, web service returns expected HTTP error code (e.g., HTTP 403)

- Invoke “load” service call as an authorized user, web service returns expected JSON based on baseline dataset

Basic Update test, using baseline dataset with a few updated values

- Invoke “save” or “update” service call as an unauthorized user, web service returns expected HTTP error code (e.g., HTTP 403)

- Invoke “save” or “update” service call as an authorized user, check the database and validate updated JSON was indeed saved

Basic Delete test

- Invoke “delete” service call as an unauthorized user, web service returns expected HTTP error code (e.g., HTTP 403)

- Invoke “delete” service call as an authorized user, check the database to ensure matrix/configuration was properly deleted
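To make the shape of these checks concrete, here is a self-contained sketch of the “save” and “load” cases in Python. Everything service-related in it is an assumption made for illustration: the `/matrix` path, the bearer-token scheme, the `VALID_TOKEN` value, and the in-memory `STORE` dictionary standing in for the database. A real integration test would call the deployed service and check the real database instead of the toy server used here.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

VALID_TOKEN = "authorized-user-token"  # hypothetical credential
STORE = {}                             # stands in for the database


class MatrixHandler(BaseHTTPRequestHandler):
    """Toy stand-in for the real "save" (PUT) and "load" (GET) services."""

    def _authorized(self):
        return self.headers.get("Authorization") == f"Bearer {VALID_TOKEN}"

    def _reply(self, status, payload=None):
        body = json.dumps(payload).encode() if payload is not None else b""
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_PUT(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)  # always drain the request body
        if not self._authorized():
            return self._reply(403)
        STORE["matrix"] = json.loads(body)
        self._reply(200, STORE["matrix"])

    def do_GET(self):
        if not self._authorized():
            return self._reply(403)
        self._reply(200, STORE.get("matrix", {}))

    def log_message(self, *args):
        pass  # keep test output quiet


def call(base_url, method, token=None, payload=None):
    """Invoke the service; return (status code, parsed JSON or None)."""
    data = json.dumps(payload).encode() if payload is not None else None
    request = urllib.request.Request(f"{base_url}/matrix", data=data, method=method)
    if token:
        request.add_header("Authorization", f"Bearer {token}")
    # An empty ProxyHandler bypasses any proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(request) as response:
            body = response.read()
            return response.status, json.loads(body) if body else None
    except urllib.error.HTTPError as error:
        return error.code, None


def run_checks():
    """Start the toy service, run the save/load checks, return what was observed."""
    server = HTTPServer(("127.0.0.1", 0), MatrixHandler)  # port 0 picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base_url = f"http://127.0.0.1:{server.server_port}"
    baseline = {"Model A": {"total": 100, "standard_icemaker": 67, "awesome_icemaker": 33}}
    try:
        return {
            "save_unauthorized": call(base_url, "PUT", payload=baseline)[0],
            "save_authorized": call(base_url, "PUT", VALID_TOKEN, baseline)[0],
            "stored": STORE.get("matrix"),
            "load_unauthorized": call(base_url, "GET")[0],
            "load_authorized": call(base_url, "GET", VALID_TOKEN),
        }
    finally:
        server.shutdown()
        server.server_close()
```

Note that nothing here touches a browser or a UI. The checks exercise the service boundary directly, which is what keeps this tier faster and less brittle than the UI tests that follow.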

User Interface Tests

Check major operations, such as:

- Log on as an authorized user, ensure default data loads

- Edit one cell, save grid, check the database to ensure data was properly updated

Final Thoughts

My test list is frankly sketchy. I’ve had to use a somewhat contrived example, and I’ve left off plenty of test cases I might consider automating based on discussions with the team. I know some readers might have objections to the particular mix, and that’s OK — as long as you’re developing your own ideas of the sorts of test coverage you’d like to see!

Point being, use these examples as a starting point for evaluating how you’re mixing up your own automated testing. Work hard to push appropriate testing to the appropriate style of tests, and by all means, focus on keeping all your tests maintainable and high-value!

Jim is an Executive Consultant at Pillar Technology where he works with organizations trying to improve their software delivery process. He’s also the owner/principal of Guidepost Systems which lets him engage directly with struggling organizations. He has been in various corners of the IT world since joining the US Air Force in 1982. He’s spent time in LAN/WAN and server management roles in addition to many years helping teams and customers deliver great systems. Jim has worked with organizations ranging from startups to Fortune 10 companies to improve their delivery processes and ship better value to their customers. When not at work you might find Jim in the kitchen with a glass of wine, playing Xbox, hiking with his family, or banished to the garage while trying to practice his guitar.