This is a guest post by Peter G Walen.

Software Quality Assurance, Software Testing, and Quality Control. All the same, right? We hear people bandy the phrases about. We hear them interchangeably. Sometimes we find them pointedly at odds with each other. Which is the right term?

Instead of giving a single answer, let’s look at what they mean and where they came from.

Origins

Quality Assurance and Quality Control are related. There are interesting ideas around them that may help us consider what their underlying meaning really is, as opposed to what we are often told it is. When done properly, they are part of a quality system and quality management program.

Quality Assurance (QA) and Quality Control (QC) have similar origins. They come to us from manufacturing where variation in parts or components is a bad thing. Quality Control, in its initial incarnation and earliest references in the 1920s, looked to see if engineering requirements were met.

As manufacturing became more complex, the considerations around “quality” and QC likewise became more complex. A significant portion of this developed into a discipline for controlling the process and limiting variation within it. The goal was to ensure the processes in use conformed to the defined process model.

In the 1950s, the idea of a quality “profession” had grown, still in manufacturing, to include a nascent set of QA and quality audit functions. These grew from industries where public health and safety were important, if not paramount. This led to an independent review and audit of the products.

The idea behind “assurance” is that assembled pieces selected at random meet very specific criteria. When differences are found, if they are within a determined range, then they may be accepted. If they are outside of the acceptable range, then there will likely be repercussions. These might include stopping the assembly process to test pieces more rigorously or scrapping the entire run and starting again.
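The sampling idea above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the function name `inspect_run`, the target, the tolerance, the sample size, and the number of allowed rejects are all hypothetical, not taken from any quality standard.

```python
import random


def inspect_run(measurements, target, tolerance, sample_size, max_rejects, seed=None):
    """Pick pieces at random and decide whether the production run is accepted.

    All parameters are illustrative assumptions, not values from a standard.
    """
    rng = random.Random(seed)  # seeded so an inspection can be repeated
    sample = rng.sample(measurements, sample_size)

    # A piece is rejected when it falls outside the acceptable range.
    rejects = [m for m in sample if abs(m - target) > tolerance]

    return {
        "sample": sample,
        "rejects": rejects,
        # Too many rejects means repercussions: stop the line, test more
        # rigorously, or scrap the run.
        "accepted": len(rejects) <= max_rejects,
    }
```

Calling `inspect_run([10.00, 10.01, 9.99, 10.02], target=10.0, tolerance=0.05, sample_size=3, max_rejects=0)` accepts the run, because every piece is within tolerance no matter which three are drawn.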

The “assurance” of quality relies on the “control” of quality. If the defined process is followed to a fare-thee-well, then there is little to no room for variation. If there is little to no room for variation, then there is even less room for error.

This works well when building widgets and other physical objects. Applying the same concepts to software creation has some limitations.

Quality Control as defined by the American Society for Quality (ASQ) tells us to audit projects. The purpose is to make sure the prescribed process model is being followed. For software, each team can define its own process model. The challenge for auditors is to recognize that these models can, and likely will, be different in order to meet the needs of the teams and their customers.

A “one size fits all” model usually results in a model that does not work for or help any team. An astute, experienced auditor will recognize this.

Quality

We have looked at the “assurance” and “control” parts of those terms. We have not looked at what is meant by “quality.” What is it that we are attempting to “control” or “assure”? Here, things get muddy.

There are oft-quoted references to “adherence to requirements.” Also, there can be the “absence of (known) bugs/defects.” Some will cite Philip Crosby or W. Edwards Deming or Kaoru Ishikawa. Some circles of software people will cite the late, near-legendary Jerry Weinberg: “Quality is value to some person.” For others, the ultimate “authority” is Joseph Juran: “fitness for use.” Each of these gurus and thought leaders around “quality” has his own flavor and nuance.

Genichi Taguchi’s “Introduction to Offline Quality Control” touches on another aspect. He said, “Quality is the loss a product causes to society after being shipped… other than any losses caused by its intrinsic function.” This idea combined with Juran’s gives another, broader view. It might be stated that “Quality is fitness for use that does not cause broader harm.” This gives a nuanced definition that is important for software. It goes beyond a question of adherence to requirements and beyond a mere “Is it right?” This definition calls in a broader concept of what “right” might be.

Quality Audits and Inspections

There is a pair of concepts that often gets ignored by most people and organizations. Without considering “quality,” quality programs, and the relationship between software testing and software quality, they are easy to overlook.

Inspections in the world of manufactured, physical products are examinations of product characteristics. That can include physical measurements and tests of one or more characteristics of the product. Is it the right size? Do components fit within established tolerances? Will it handle the weight it is intended to handle?

The questions considered have traditionally been applied to physical, manufactured things. Over the last 35 to 40 years, these ideas have also been applied to service products. Are response times for questions within a given time frame? Are customer problems addressed and resolved as quickly as possible? Is the customer kept informed of the progress of the work and the likelihood of a resolution?

Quality audits may look very much like inspections. The prime difference is that while an audit may include some aspects of an inspection, the purpose is not to verify the product itself. An audit should evaluate the way the product is made.

The purpose of the audit is to look at how closely the defined process model is being followed. Are there variations? Then the reason and need for the variations are examined. The goal is to continually improve how work is done, thereby improving the product itself. It does not matter if the “product” is a physical item, a service, or, for our purposes, software.

One other aspect of a quality audit must be considered. Not only should an audit look at the process of making the product, it should also review the process of inspecting the product.

With these ideas examined, let us now consider the elephant in the room most people try to ignore or excuse as “everyone knows what we mean by that.”

Testing

One of the common views of testing, which many consider the “real” or “correct” view, is that software testing validates behavior. Tests “pass” or “fail” based on documented requirements. Software testing confirms those requirements.

But is that all that software testing does? Is testing to verify adherence to requirements the only purpose for software testing? Does this mean that testing software is the same as using a digital micrometer for a manufactured product or component? The central issue lies around what software testing is and is supposed to be.

A common definition of software testing might run along the lines of “an activity to check whether the actual results match the expected results and ensure the software is defect-free.” Along with that, certain classic measures tend to show completeness and validity of “pass/fail” results.

These often include:

- percentage of code or function coverage

- percentage of automated vs. manual tests

- number of test cases run

- number of passing and failing test cases

- number of bugs found and fixed

I recognize that in some circumstances there are contractual obligations that define what the “deliverables” are for software testing. In my experience, this is an extremely narrow and limited scope for testing. It does little more than provide a level of protection in case of a lawsuit when the software project fails to launch, or when significant defects are found after release. My concern is that, while these measures may shed some light on testing, they tell nothing of the efficacy of the testing or the quality of the software except by inference.
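To make that concern concrete, here is a small Python sketch. Everything in it is hypothetical and built for illustration: `apply_discount` is an invented function with a deliberate defect, and the two checks stand in for two test suites that would report the same coverage and the same number of test cases run.

```python
def apply_discount(price, percent):
    """Hypothetical function under test, with a deliberate defect."""
    # Defect: subtracts the raw percent instead of a percentage of the price.
    return price - percent


def weak_check():
    # Executes every line of apply_discount, so coverage is complete,
    # but only confirms that a number comes back.
    return isinstance(apply_discount(200.0, 10), float)


def strong_check():
    # Same coverage and same test count, but compares against the
    # value the (assumed) requirement calls for.
    return apply_discount(200.0, 10) == 180.0


def summarize(checks):
    """Produce the classic counts: run, passed, failed."""
    results = [check() for check in checks]
    passed = sum(results)
    return {"run": len(results), "passed": passed, "failed": len(results) - passed}
```

Here `summarize([weak_check])` reports one test run and one passed, while `summarize([strong_check])` reports one test run and one failed. The counts for the weak check look perfect, yet the defect is still there. The numbers say something about what was executed and very little about how well the software was examined.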

It is interesting to me that when I have raised these questions, advocates of testing as confirmation usually respond that the question of the “software quality” is not a concern of “testing.” Thus, testing is independent of the concerns of overall software quality.

Does this make sense? It does not to me, nor does it feel right. Perhaps it is because of the definition of “testing” that is needed to accept this as a condition. What if we looked at other definitions of what testing is?

Cem Kaner, J.D., Ph.D., uses a different definition of testing. Kaner calls testing “an empirical, technical investigation conducted to provide stakeholders with information about the quality of the product or service under test.”

Using this, we see testing as fact-based (empirical). It uses appropriate tools (technical). It provides people who need it (stakeholders) with relevant information about the software (investigation).

When people ask me what testing is, the working definition I have used for several years is:

Software testing is a systematic evaluation of the behavior of a piece of software, based on some model.

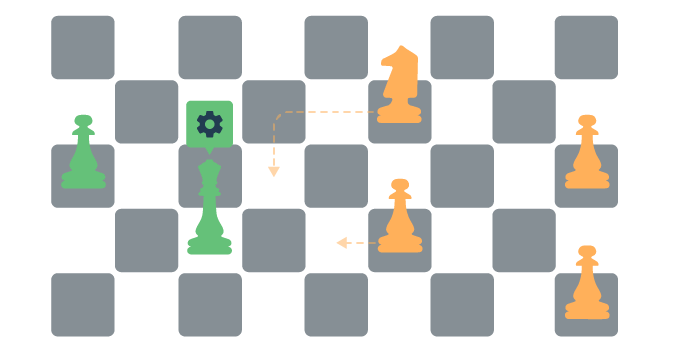

Using models relevant to the project, we can select appropriate methods and techniques in place of relying on organizational comfort-zones. If one model we use is “conformance to documented requirements” we exercise the software one way. If we are interested in aspects of performance or load capacity, we’ll exercise the software in another way.
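As a rough sketch of what exercising the same software under two models could look like, consider the Python below. The function `find_duplicates`, the sample requirement, the data size, and the time budget are all assumptions I made up for the example, not details from a real project.

```python
import time


def find_duplicates(items):
    """Hypothetical function under test: sorted values that appear more than once."""
    seen, dupes = set(), set()
    for item in items:
        if item in seen:
            dupes.add(item)
        seen.add(item)
    return sorted(dupes)


def check_conformance():
    # Model 1: conformance to a documented requirement
    # (assumed requirement: duplicates are returned once each, in sorted order).
    return find_duplicates([3, 1, 3, 2, 1]) == [1, 3]


def check_load(size=200_000, budget_seconds=2.0):
    # Model 2: load capacity. The size and time budget are assumed values.
    data = list(range(size)) + [0]  # exactly one duplicate in a large input
    start = time.perf_counter()
    result = find_duplicates(data)
    elapsed = time.perf_counter() - start
    return result == [0] and elapsed <= budget_seconds
```

Both checks exercise the same function. The first asks whether it does what the requirement says. The second asks whether it still does so, quickly enough, when the input grows. Neither answers the other's question.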

Thought models can help us direct focus and shape thinking. If looking through a specific lens, testers may notice one set of items. Shift the lens (model) and the tester may notice other items.

There may be a contract limitation on what view, model, or models can be used for a given project, but there is no rule limiting testers to using a single model. Most software projects will need many thought models to be used in testing.

Testing as Inspections and Audits

Good testing takes disciplined, thoughtful work. Exercising the documented requirements is one form. The challenge often comes in how to exercise the requirements. Following specific steps is also a form of testing. This might be to determine cases to be used for a public demo or to recreate a specific problem scenario. Looking for performance characteristics or the ability to handle various loads is also testing. Each of those is a good example of “testing as inspection.” We are examining the software after it has been created.

Good testing requires communication. Real communication is not documents being emailed back and forth. Communication is bi-directional and conversational. It is not a lecture or a monologue. Conversation is required to make sure all parties are in alignment.

Where the alignment often fails is the reason or purpose behind the project. What need is being filled or what problem is being addressed? Good testing looks at the reason behind the project, the change that is intended to be seen. Good testing looks to understand the impact of that change on the system itself and on the people using the software.

By itself, testing does not assure anything. Good testing challenges assurances. It investigates possibilities and asks questions about what is discovered. While most people apply this to the software after it is created, it can be used far more effectively to evaluate the understanding of requirements, to assess the applicability of the design, and to consider underlying assumptions and presumptions. These are examples of software testing as quality audit.

Good software testing speaks to the impact on the system as it exists. Software testing serves the stakeholders of the project by being in service to them. The ultimate stakeholders for software projects are the customers and end-users of the product. The primary goal of a Quality Management System is maximizing customer satisfaction.

Given that software testing takes the place of physical inspections and mechanical exercises for the produced or manufactured goods, testing cannot be differentiated from its function in a quality program.

Testing plays a significant part in a considered quality management system. There is no way to genuinely differentiate between them. It is disingenuous to consider that software testers and software testing are not key to the quality “business.” Testing is the quality business.

References:

- Kaner, Falk & Nguyen, Testing Computer Software, 2nd Edition, Wiley, 1999

- Charles Mill, The Quality Audit: A Management Evaluation Tool, McGraw-Hill, 1988

- J.P. Russel (ed.), The ASQ Auditing Handbook, 4th Edition, ASQ Audit Division, 2012

- Philip B. Crosby, Quality is Free, McGraw-Hill, 1979

- W. Edwards Deming, Out of the Crisis, MIT, Center for Advanced Engineering Study, 1988

- Joseph M. Juran, Juran’s Quality Control Handbook, 4th Edition, McGraw-Hill, 1988

- Taguchi & Wu, Introduction to Offline Quality Control, Central Japan Quality Control Assn., 1979

Peter G. Walen has over 25 years of experience in software development, testing, and agile practices. He works hard to help teams understand how their software works and interacts with other software and the people using it. He is a member of the Agile Alliance, the Scrum Alliance, and the American Society for Quality (ASQ), an active participant in software meetups, and a frequent conference speaker.