This is a guest post by Matthew Heusser.

Today I will give a few examples of cases that are not big enough to warrant a full-time performance tester, where “traditional” “functional” testers and test groups stepped up to do the work. I’ll show you what I did, teach you how to do it, and, along the way, explain the difference between performance testing and performance engineering.

Let’s get started.

Drowning in data

Early on in my time at Socialtext, we released a new module developed with an entirely new back-end in Python. The testers didn’t know much about the system; we certainly did not know how it would perform. We took this concern to the CEO, who was also our acting Vice President of Engineering, along with an estimate of how long from-scratch load testing would take. Our CEO wanted to get to production, so he told us to skip it.

A week later I’m sitting in a team retrospective, and the question comes up. “Why didn’t QA find the performance problems?” The CEO of the company answers “Matt brought the potential problems to my attention. He suggested some options. I decided to take a calculated risk and skip performance testing. What’s next?”

That took the heat off for a week or two, but the questions started quickly: What was QA going to do for performance testing?

The short answer is, we didn’t need to. We didn’t need to test the software to find out what was slow. We knew it was slow. We even had data — metrics — on how long each unique URL “page” took to load. These metrics were sortable, with data on the mean, median, quartiles, slowest 10% average, slowest 1% average, and so on. This wasn’t a performance testing problem, but a performance fixing problem.
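To make that list of metrics concrete, here is a minimal sketch in Python of how such a per-URL summary could be computed from raw load times. The function name, the dictionary keys, and the choice to treat "slowest 10% average" as the mean of the slowest tenth of the samples are all assumptions for illustration; this is not the reporting code we actually had.

```python
import statistics


def summarize_load_times(samples):
    """Summarize load times (in seconds) recorded for one URL.

    Hypothetical helper: the names and the exact definition of the
    "slowest N% average" are assumptions, not a real tool's output.
    """
    ordered = sorted(samples)
    n = len(ordered)
    # quantiles(n=4) returns the three cut points between the quartiles.
    q1, _, q3 = statistics.quantiles(ordered, n=4)

    def slowest_average(percent):
        # Average of the slowest `percent` of samples, at least one sample.
        count = max(1, n * percent // 100)
        return statistics.mean(ordered[-count:])

    return {
        "mean": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "q1": q1,
        "q3": q3,
        "slowest_10pct_avg": slowest_average(10),
        "slowest_1pct_avg": slowest_average(1),
    }
```

Sorting a table of these summaries by the slowest-1% column is often more revealing than sorting by the mean, because a page that is usually fast but occasionally terrible hides behind a healthy average.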

That kind of argument, strictly speaking, is true. Testing designs the scenarios, runs the tests, and provides the data showing that saving a page takes six seconds under production load. Then you brush off your hands and move on to the next piece of work.

Performance Engineering actually tries to identify where the weak points are in the system and how to reinforce them. The classic ways to do that are with monitoring and profiling, which I’ll cover next.

Database dichotomy

Another time the work was for an insurance company. As you’d guess, it was a lot of database work, adding new customers to the system, processing adds and removals in coverage, inputting and processing claims. The ERP system did a lot of this work; the programmers automated getting the data in, the data out, and “shaking all about.” (That is the website, the data warehouse, and the customizing.)

As the “test guy” I was pulled in to help with a data extract that took four hours to run and was getting slower. If it continued its growth, it would have to run overnight, then it would run into the batch window and the database connections would start to time out. We had perhaps a month to fix it without problems, two without serious problems. This is an easy performance test: It takes too long. Done. The engineering took a little more work.

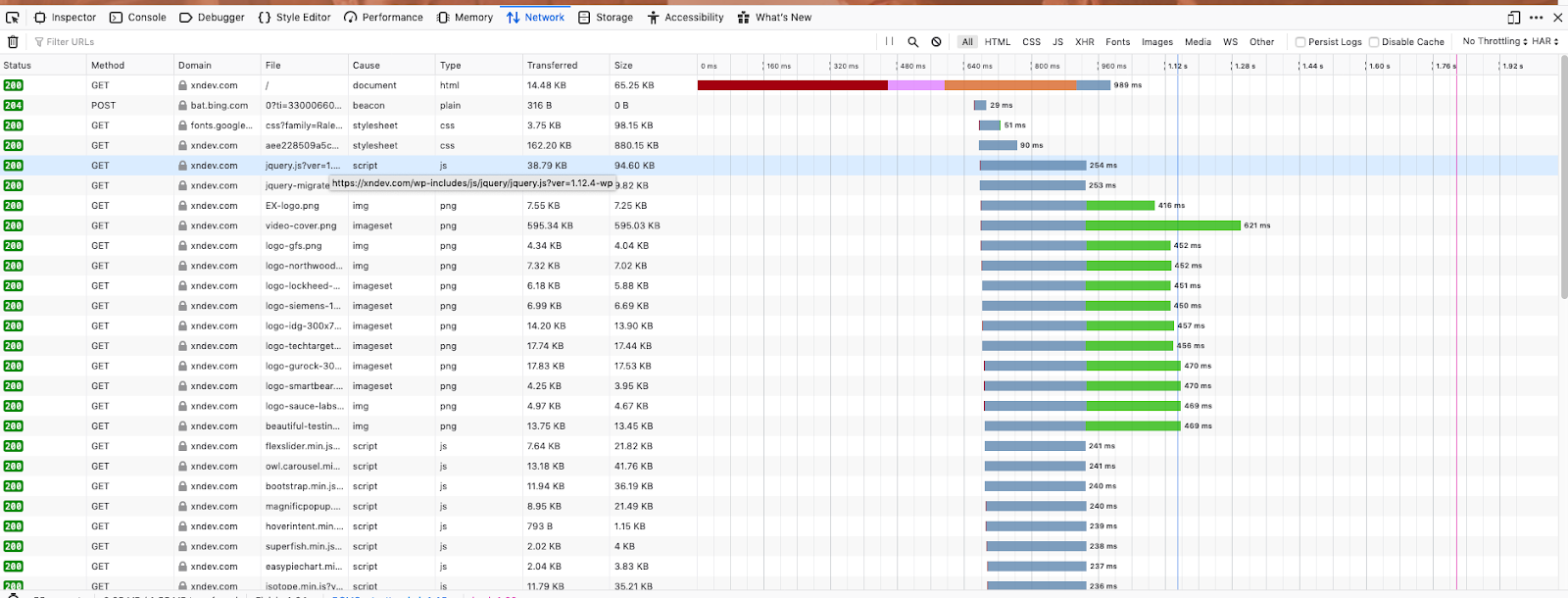

Profiling breaks the entire transaction down into the smallest possible pieces. For a website, that might be the time to render, the delay between servers, and the time on the server. Once you know the time on the server, you can profile the application to see how much time it spends on each line of code. Here’s a simple waterfall diagram I got from using Firefox, using (T)ools->(D)eveloper->(N)etwork, and loading my website, xndev.com. The page loads and renders in 2 seconds without an error – but I do want to look at that outer HTML.

For the database dilemma, I profiled the code, written in Perl, using Devel::Profiler. Most popular languages have a tool like this. When the program finished, it spit out results that told me how much time was spent in which methods, and how often they were called. The program spent an amazing amount of time on a line that ran a select statement. The statement wasn’t too slow on its own, taking only a few seconds, but it was called tens of thousands of times.
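For readers who have not run a profiler before, here is a rough Python equivalent using the standard library's cProfile and pstats modules. This is a sketch, not the Perl session described above: `fetch_customer` and `export_all` are hypothetical stand-ins for the real per-customer select and export loop.

```python
import cProfile
import io
import pstats


def fetch_customer(customer_id):
    # Hypothetical stand-in for the per-customer select statement.
    return {"id": customer_id}


def export_all(customer_ids):
    # Hypothetical export loop: one fetch per customer.
    return [fetch_customer(cid) for cid in customer_ids]


def profile_export(customer_ids):
    """Run export_all under the profiler and return the text report."""
    profiler = cProfile.Profile()
    profiler.runcall(export_all, customer_ids)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by call count so the most frequently called functions come first.
    stats.sort_stats("calls").print_stats(10)
    return buffer.getvalue()
```

Printing `profile_export(range(1000))` produces a table with an `ncalls` column. A function that is cheap per call but sits at the top of that column is exactly the kind of finding described above.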

The programmer wrote the program like this:

    Select every customer_id that qualifies
    For each customer_id
        Select all customer data where id=customer_id
        Export customer data
    Next

I rewrote it like this:

    Select all customer data that qualifies into one query result
    For each customer_id
        Export customer data
    Next

Suddenly those hours disappeared and the application ran in 45 minutes. That is a long time to keep a database connection open, so I added another step: pulling the data into an in-memory data structure, closing the database connection, and then running the for loop. This dropped the time to fifteen minutes.
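The difference between the two versions is the number of round trips to the database. Here is a small sketch in Python with an in-memory SQLite database. The `customers` table, its columns, and the `qualifies` flag are invented for illustration; the original was Perl against a different database.

```python
import sqlite3


def build_demo_db():
    """Create a tiny in-memory database; the schema is purely illustrative."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, qualifies INTEGER)"
    )
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [(i, f"customer-{i}", i % 2) for i in range(1, 11)],
    )
    return conn


def export_one_query_per_customer(conn):
    """Original shape: one select for the ids, then one select per id."""
    ids = [
        row[0]
        for row in conn.execute(
            "SELECT id FROM customers WHERE qualifies = 1 ORDER BY id"
        )
    ]
    exported = []
    for customer_id in ids:
        row = conn.execute(
            "SELECT id, name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        exported.append(row)
    return exported, 1 + len(ids)  # rows, number of queries issued


def export_single_query(conn):
    """Rewritten shape: one select returns every qualifying row."""
    rows = conn.execute(
        "SELECT id, name FROM customers WHERE qualifies = 1 ORDER BY id"
    ).fetchall()
    return rows, 1  # rows, number of queries issued
```

Both functions return the same rows. With five qualifying customers, the first issues six queries and the second issues one; with tens of thousands of customers, that gap is where the hours went.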

That made the application not only performant, but testable. We could break it into two pieces: the data-gatherer piece and the exporter piece.
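A sketch of what that split might look like in Python follows. The function names, the `customers` schema, and the CSV output format are assumptions for illustration; the original export format is not described here.

```python
import csv
import io
import sqlite3


def gather_customers(conn):
    """Data-gatherer: run one query, copy the rows into memory, close the connection."""
    try:
        return conn.execute(
            "SELECT id, name FROM customers WHERE qualifies = 1 ORDER BY id"
        ).fetchall()
    finally:
        # The connection is released before any export work starts.
        conn.close()


def export_customers(rows, out):
    """Exporter: write already-gathered rows to any file-like object as CSV."""
    writer = csv.writer(out)
    writer.writerow(["id", "name"])
    writer.writerows(rows)


# The exporter can be exercised with hand-made rows and no database at all.
buffer = io.StringIO()
export_customers([(1, "Ada"), (2, "Grace")], buffer)
```

Because the exporter only sees a list of rows, a test can feed it edge cases (empty lists, odd characters in names) directly, and the gatherer can be checked separately against a small test database.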

Where’s the budget?

What’s interesting about the engineered approach is there is no budget for it. No “performance engineer” role. You don’t have to buy new tools, hire a consultant, or have a “methodology” conversion. Often the work can be done in discretionary time without impacting any project schedule.

Instead, people need to focus on the issue and collaborate to solve problems.

The example above was a simple system; the code itself was the problem. I didn’t have to try to back-monitor to figure out if the bottleneck was CPU, disk, memory, or network, then figure out how to fix it. Often when I find performance problems in these “marginal” cases, the problems themselves really are just that simple. Someone just has to dive in and get the data. Going from performance testing to performance engineering is a great way to blur the lines between roles, add value, and prevent the “QA is dead weight” cliche.

Give it a try.

Matthew Heusser is the Managing Director of Excelon Development, with expertise in project management, development, writing, and systems improvement. And yes, he does software testing too.