This is a guest post by Bob Reselman. Last updated July 2021.

Event-driven application architectures let the components of a very large distributed system communicate and interoperate asynchronously. They are the glue that binds together the various services and components that make up the system.

Passing messages between components using a common message broker such as Kafka or RabbitMQ makes it possible for components to operate independently at scale. It’s not unusual for systems that support an event-driven architecture to process more than 20,000 messages a second.

In order to design tests at this level of scale, test practitioners need to have a good understanding of the dynamics of event-driven application architectures. Here’s how to design tests that ensure the various components within the architecture perform as expected at scale.

Understanding Synchronous vs. Event-Driven Applications

Before describing a general approach to testing event-driven application architectures, it’s useful to understand what an event-driven application architecture is.

Basically, an event-driven application architecture is one in which the services (aka functions) within an application receive their input and publish their output as messages stored in a message queue. This differs from a synchronous architecture, in which data is passed straight to a service by making a direct call.

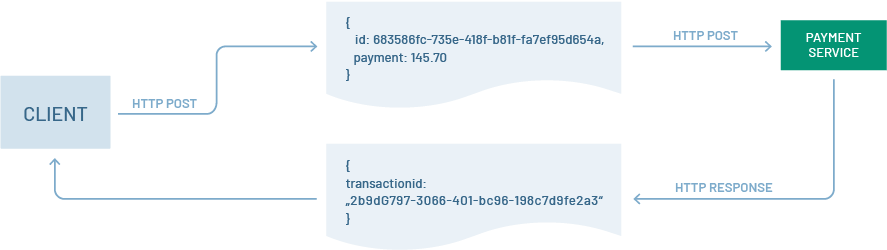

The canonical example of a synchronous interaction is the standard request-response communication to and from a web server. A caller makes a request to a web server and then waits to receive a response. The caller is “locked” in the interaction until a response is returned. The response might take a millisecond, or it might take a few seconds, but the caller can do nothing until the interaction completes.

Figure 1 below illustrates a synchronous interaction.

Figure 1: A synchronous transaction

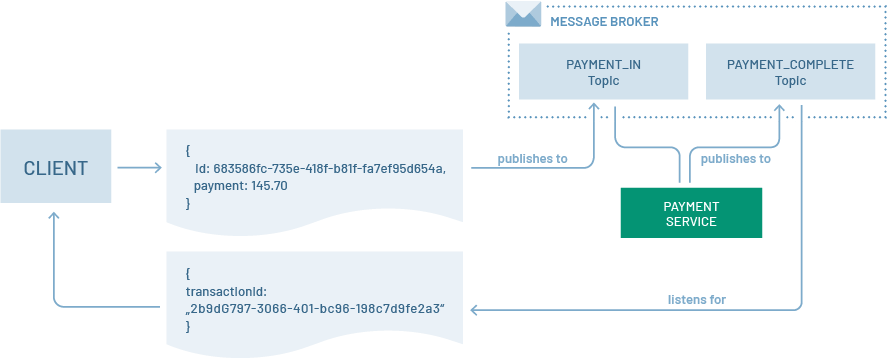

Figure 2 below illustrates the concept

Figure 2: An event-driven asynchronous transaction

The benefit of an event-driven application architecture is that there is no blocking between processes. The client doesn’t have to wait around for a response. All that’s required is to listen for the response at a predefined source on the message broker.
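The non-blocking pattern described above can be sketched with nothing but the Python standard library. This is a minimal illustration, not a real broker client: the two `queue.Queue` objects stand in for topics on a broker such as Kafka or RabbitMQ, and `price_service`, `run_demo` and the message fields are hypothetical names chosen for the example.

```python
import queue
import threading


def price_service(request_topic: queue.Queue, response_topic: queue.Queue) -> None:
    """Consume requests until a None sentinel arrives; publish one response each."""
    while True:
        message = request_topic.get()
        if message is None:
            break
        response_topic.put({"id": message["id"], "price": message["qty"] * 2})


def run_demo() -> list:
    request_topic = queue.Queue()   # stand-in for a request topic on the broker
    response_topic = queue.Queue()  # stand-in for the predefined response topic
    worker = threading.Thread(target=price_service, args=(request_topic, response_topic))
    worker.start()

    # Publishing returns immediately; the client is never locked in a call.
    for i in range(3):
        request_topic.put({"id": i, "qty": i + 1})

    # Later, the client listens for responses at the predefined source.
    results = [response_topic.get(timeout=2) for _ in range(3)]

    request_topic.put(None)  # tell the service to shut down
    worker.join()
    return results


if __name__ == "__main__":
    print(run_demo())
```

The client and the service share only the two queues, which is what lets each side run at its own pace.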

Event-driven architectures are well suited for applications such as high-speed trading on a stock exchange, or even for ride-share programs. For example, instead of your cell phone having to keep asking the ride-share server where the car is via an ongoing series of HTTP requests and responses, the client application wires into a topic on a message broker and receives messages containing location information as the car moves along.

While event-driven architectures have benefits, they can be a challenge to test. In terms of performance testing, it’s not simply a matter of measuring the time between a request and response. A message can go out, and the follow-up message may become available at any later time, or not at all.
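One common way to measure latency when the request and the follow-up are decoupled is to tag each message with a correlation ID and compare timestamps afterwards. The sketch below assumes the test harness has already collected two maps of correlation ID to timestamp (in milliseconds); the function name and the sample data are illustrative only.

```python
def end_to_end_latencies(published: dict, received: dict) -> tuple:
    """Return ({correlation_id: latency_ms}, [ids that never got a follow-up])."""
    latencies = {}
    missing = []
    for correlation_id, sent_at in published.items():
        if correlation_id in received:
            latencies[correlation_id] = received[correlation_id] - sent_at
        else:
            missing.append(correlation_id)
    return latencies, missing


# Illustrative data: timestamps in milliseconds since the start of the test run.
published = {"a": 0, "b": 10, "c": 20}
received = {"b": 50, "a": 300}  # "b" was answered before "a"; "c" never was

latencies, missing = end_to_end_latencies(published, received)
print(latencies)  # {'a': 300, 'b': 40}
print(missing)    # ['c']
```

Reporting the unanswered IDs separately matters, because a message that never produces a follow-up would otherwise silently disappear from the latency statistics.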

Also, network input and output to and from the broker, the efficiency of the broker, the efficiency of the algorithms processing the event data, and even the structure of the event messages can affect the system performance. There are many factors to consider.

Nonetheless, event-driven applications must be tested in as thorough a manner as is reasonable. The first place to start is with logging.

Logging Is Everything

Effective logging is critical in any application architecture, but when it comes to event-driven application architecture, it’s essential.

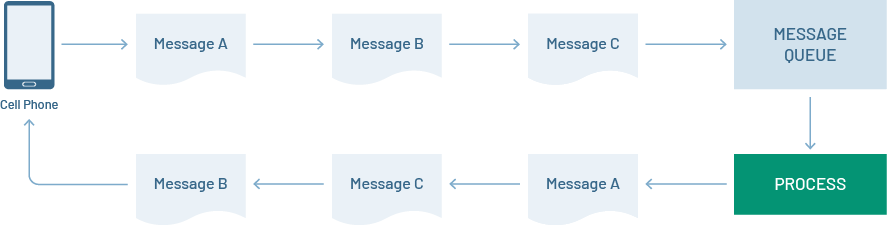

Remember, in an event-driven application architecture, messages are coming and going at near-instantaneous velocities. And, in many cases, the order is not guaranteed. For example, messages A, B and C could fire in sequence to a message queue, and yet the processor could emit the processed message in the order B, C and A, for example, as shown below in figure 3.

Figure 3: Message order is not necessarily guaranteed in an event-driven application architecture

Thus, the only way to really figure out what’s going on in terms of application behavior is to make sure that all information relevant to application performance is being logged, with timestamping being the no-brainer requirement.
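As a rough illustration of why timestamps are the baseline requirement, the sketch below writes one JSON log line per event and then uses the timestamps to recover the order in which messages passed each stage. The field names (`ts`, `stage`, `message_id`) and both helper functions are assumptions made for this example, not a standard log schema.

```python
import json
import time
from typing import Optional


def log_event(stage: str, message_id: str, timestamp: Optional[float] = None) -> str:
    """Build one structured log line; a timestamp is always included."""
    record = {
        "ts": time.time() if timestamp is None else timestamp,
        "stage": stage,
        "message_id": message_id,
    }
    return json.dumps(record, sort_keys=True)


def order_at_stage(log_lines: list, stage: str) -> list:
    """Return message IDs in the order they passed the given stage."""
    records = [json.loads(line) for line in log_lines]
    at_stage = sorted((r for r in records if r["stage"] == stage), key=lambda r: r["ts"])
    return [r["message_id"] for r in at_stage]


# Fixed timestamps keep the example deterministic.
lines = [
    log_event("received", "A", 1.0),
    log_event("received", "B", 2.0),
    log_event("received", "C", 3.0),
    log_event("emitted", "B", 4.0),
    log_event("emitted", "C", 5.0),
    log_event("emitted", "A", 6.0),
]

print(order_at_stage(lines, "received"))  # ['A', 'B', 'C']
print(order_at_stage(lines, "emitted"))   # ['B', 'C', 'A']
```

Without the `ts` field there would be no way to tell from the log alone that the messages left the processor in a different order than they arrived.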

Also, there needs to be a log aggregation framework in place to collect log information from all sources. Examples of such frameworks are Fluentd and Logstash. If you’re using a cloud provider, there are products such as Amazon CloudWatch or Google Cloud Logging (formerly Stackdriver). Not only is log aggregation important, but you’ll also need a way to analyze and view the log information once it’s gathered. For that, you can use a product such as Kibana, Splunk or Grafana.

No matter what direction you go, the important thing to remember is that in order to get the data generated when testing an event-driven application, you need comprehensive logging to capture the information and an aggregation framework to collect it.
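Conceptually, aggregation means turning many per-source logs into one timeline. The toy sketch below shows only that idea; it is not how Fluentd or Logstash are configured or used. It assumes each source's records are tuples of `(timestamp, source, text)` already sorted by timestamp, and the `aggregate` function and sample records are hypothetical.

```python
import heapq


def aggregate(*streams):
    """Merge per-source streams, each sorted by timestamp, into one timeline."""
    return list(heapq.merge(*streams, key=lambda record: record[0]))


# Illustrative records from three sources in the system.
producer_log = [(1, "producer", "published A"), (4, "producer", "published B")]
broker_log = [(2, "broker", "enqueued A"), (5, "broker", "enqueued B")]
consumer_log = [(3, "consumer", "processed A"), (6, "consumer", "processed B")]

timeline = aggregate(producer_log, broker_log, consumer_log)
for timestamp, source, text in timeline:
    print(timestamp, source, text)
```

In a real deployment the clocks of the different hosts also need to be synchronized, or the merged order will not reflect what actually happened.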

Logs alone, however, don’t show how individual messages relate to one another within a workflow. This is where distributed tracing comes into play.

Distributed Tracing Is Essential

Given the asynchronous nature of an event-driven application architecture, it’s really hard to get a clear idea of the logical execution path of a given workflow.

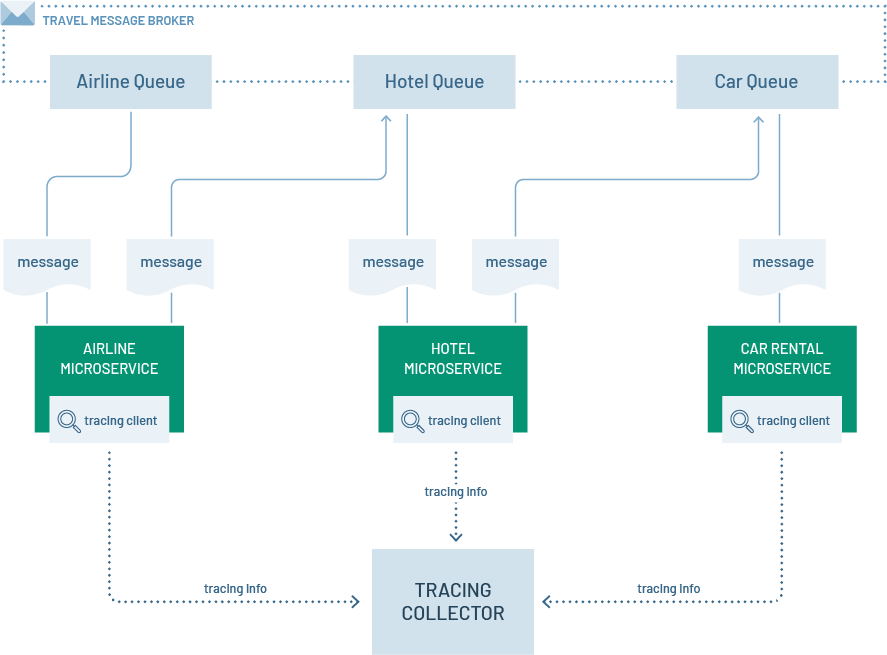

For example, figure 4 below shows an event-driven application architecture for a travel reservation system. Messages pass to and fro among various queues to get an airline ticket, reserve a hotel room and then rent a car. While there is a variety of messages being generated asynchronously among the services, there is a logical sequence in play that makes up the travel transaction — airline ticket, hotel reservation and car rental.

Figure 4: Distributed tracing provides a clear view of a message sequence in a distributed environment

Yet, if any message can happen at any time, how do we understand what is happening in the entirety of interactions? This is where distributed tracing comes in.

Distributed tracing is designed to monitor the complete activity path of a transaction throughout the entire system. A tracing client is installed on each computing artifact that hosts a service. The client monitors activity and sends back information organized by workflow instance.

The tracing tool has the smarts to keep track of everything on an instance-by-instance basis. Most modern tracing tools can handle short execution times — a few seconds, for example — as well as long execution times on the order of a week.
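The core mechanism behind this kind of tracking can be sketched in a few lines: every message carries a trace ID in its headers, each service copies that ID onto the messages it emits, and a collector groups the recorded spans by ID. The code below is a simplified model under those assumptions. `new_message` and `SpanCollector` are hypothetical names, not the API of Jaeger, Wavefront or any other tracing product.

```python
import uuid
from collections import defaultdict
from typing import Optional


def new_message(payload: str, parent: Optional[dict] = None) -> dict:
    """Create a message; reuse the parent's trace ID so the workflow stays linked."""
    trace_id = parent["headers"]["trace_id"] if parent else uuid.uuid4().hex
    return {"headers": {"trace_id": trace_id}, "payload": payload}


class SpanCollector:
    """Hypothetical stand-in for a tracing backend: groups spans by trace ID."""

    def __init__(self) -> None:
        self._spans = defaultdict(list)

    def record(self, message: dict, service: str, start: int, end: int) -> None:
        trace_id = message["headers"]["trace_id"]
        self._spans[trace_id].append({"service": service, "start": start, "end": end})

    def trace(self, trace_id: str) -> list:
        """Return one workflow's spans in the order they started."""
        return sorted(self._spans.get(trace_id, []), key=lambda span: span["start"])


collector = SpanCollector()

# Two travel bookings whose messages interleave in time.
flight_1 = new_message("book flight")
flight_2 = new_message("book flight")
hotel_1 = new_message("reserve hotel", parent=flight_1)
hotel_2 = new_message("reserve hotel", parent=flight_2)
car_1 = new_message("rent car", parent=hotel_1)

collector.record(flight_1, "airline", start=0, end=5)
collector.record(flight_2, "airline", start=1, end=4)
collector.record(hotel_2, "hotel", start=5, end=9)
collector.record(hotel_1, "hotel", start=6, end=8)
collector.record(car_1, "car-rental", start=9, end=12)

booking_1 = collector.trace(flight_1["headers"]["trace_id"])
services_1 = [span["service"] for span in booking_1]
total_1 = booking_1[-1]["end"] - booking_1[0]["start"]
print(services_1, total_1)  # ['airline', 'hotel', 'car-rental'] 12
```

Even though the two bookings overlap in time, grouping by trace ID recovers the logical sequence of each one, along with a per-transaction duration that a test can assert against.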

The important thing to understand is that a distributed tracing tool allows test practitioners to gather metrics about transactions that involve multiple events and messages. A number of products and projects now support distributed tracing in an asynchronous environment, such as Wavefront and Jaeger.

Distributed tracing is a big deal because in the past, asynchronous interactions were largely a black box. Now, by using a distributed tracing tool, such interactions are visible. This is critical when it comes to doing enterprise-level testing.

So, then the question becomes, how does one go about testing event-driven application architectures?

The Testing Hierarchy Still Holds

The biggest difference between testing synchronous applications and those that are based on event-driven architectures is having to accommodate the asynchronous nature of the interactions. Developers still have to unit test. Integration testing still needs to be done, as do performance and deployment testing.

Creating unit tests for asynchronous functions requires a different approach to test design. In an asynchronous environment, calls happen in response to a message. The message needs to be published to a message queue, pulled by a service and then processed. Then the result needs to be published to yet another queue for an interested party. It’s no longer simply a matter of throwing some code into memory and calling the function; an entire messaging architecture needs to be operational in order to conduct a single unit test.
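The publish, pull, process, publish cycle described above can be exercised in a unit test along the following lines. To keep the sketch self-contained, it swaps the real message broker for a small in-memory stand-in, which is a simplification for illustration rather than the setup the paragraph describes. `InMemoryBroker`, `reserve_hotel` and the topic names are all hypothetical.

```python
import queue
import unittest


class InMemoryBroker:
    """Hypothetical stand-in for a message broker, one queue per topic."""

    def __init__(self) -> None:
        self._topics = {}

    def publish(self, topic: str, message: dict) -> None:
        self._topics.setdefault(topic, queue.Queue()).put(message)

    def consume(self, topic: str, timeout: float = 1.0) -> dict:
        # Raises queue.Empty if nothing arrives in time, which fails the test.
        return self._topics.setdefault(topic, queue.Queue()).get(timeout=timeout)


def reserve_hotel(broker: InMemoryBroker) -> None:
    """Service under test: pull one request, publish one confirmation."""
    request = broker.consume("hotel.requests")
    broker.publish(
        "hotel.confirmations",
        {"booking_id": request["booking_id"], "status": "confirmed"},
    )


class ReserveHotelTest(unittest.TestCase):
    def test_publishes_confirmation(self) -> None:
        broker = InMemoryBroker()
        broker.publish("hotel.requests", {"booking_id": 42})  # arrange

        reserve_hotel(broker)                                  # act

        confirmation = broker.consume("hotel.confirmations")  # assert
        self.assertEqual(confirmation, {"booking_id": 42, "status": "confirmed"})
```

Saved as a module, the test can be run with `python -m unittest`. The timeout on `consume` is the important design choice: a message that never arrives turns into a test failure instead of a hung test run.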

In an event-driven application architecture, the developer is going to have to do a lot more work. But this work can be reduced by creating an automation process that scaffolds up the unit testing environment. Once the process is automated, it can be shared with each developer in the team to meet their individual needs.

Higher-level testing will also require using automation to provision the messaging infrastructure. And aggregated logging and distributed tracing need to be part of the messaging infrastructure; otherwise, test practitioners will have no way to observe and measure the results of their efforts. The logging and tracing facilities will also need to accommodate system monitoring as well as application monitoring. As mentioned earlier, in an event-driven application architecture, tens of thousands of messages can be moving through the system every second. One bad network router or slow disk drive can have as much impact on application performance as an inefficient data retrieval algorithm.

The physical environment counts. Hardware events will need to be correlated to the application events that are reflected in the aggregated logs and distributed traces.
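One simple way to approach that correlation is to look for application events that fall within a short time window around each hardware event. The sketch below assumes both kinds of events are available as `(timestamp, name)` tuples with timestamps in the same unit and from synchronized clocks; the `correlate` function, the event names and the window size are illustrative.

```python
from bisect import bisect_left, bisect_right


def correlate(hardware_events: list, app_events: list, window: int) -> dict:
    """Map each hardware event to the app events within +/- window of it."""
    app_sorted = sorted(app_events, key=lambda event: event[0])
    app_times = [timestamp for timestamp, _ in app_sorted]
    result = {}
    for hw_time, hw_name in hardware_events:
        lo = bisect_left(app_times, hw_time - window)
        hi = bisect_right(app_times, hw_time + window)
        result[hw_name] = [name for _, name in app_sorted[lo:hi]]
    return result


# Illustrative events; timestamps are seconds since the start of the test run.
hardware = [(100, "disk_latency_spike"), (500, "router_packet_loss")]
application = [
    (98, "slow_publish"),
    (101, "consumer_timeout"),
    (300, "message_processed"),
    (503, "publish_retry"),
]

matches = correlate(hardware, application, window=5)
print(matches["disk_latency_spike"])  # ['slow_publish', 'consumer_timeout']
print(matches["router_packet_loss"])  # ['publish_retry']
```

Proximity in time only suggests a relationship, so matches like these are best treated as leads for investigation rather than proof of cause.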

In Conclusion

Event-driven application architecture is becoming more popular, particularly as a single event within the given application becomes interesting to a larger variety of consuming services. For example, the data generated by a single click on a web page might be absorbed by a variety of services, each with a distinct purpose, from heatmap reporting to user profile analysis to purchase fulfillment.

Event-driven application architecture provides a lot of power, but it also creates challenges for test practitioners. The good news is that these challenges are well-known. Companies that are successful in testing event-driven application architecture understand the asynchronous nature of the paradigm. They weave aggregated logging and distributed tracing into every level of test activity, from unit testing to system-wide performance testing.

As event-driven applications grow in popularity, we’re going to see more need for test practitioners to adopt testing methodologies that support asynchronous communication between services. It will be a challenging undertaking, but it’s one worth taking because meeting big challenges produces great software. And making great software is what it’s all about.