This is a guest post by Matthew Heusser.

One way to look at a test plan is as a collection of risks worth managing. This checklist provides ideas for what those risks might be and how to handle them, along with what to call out of scope.

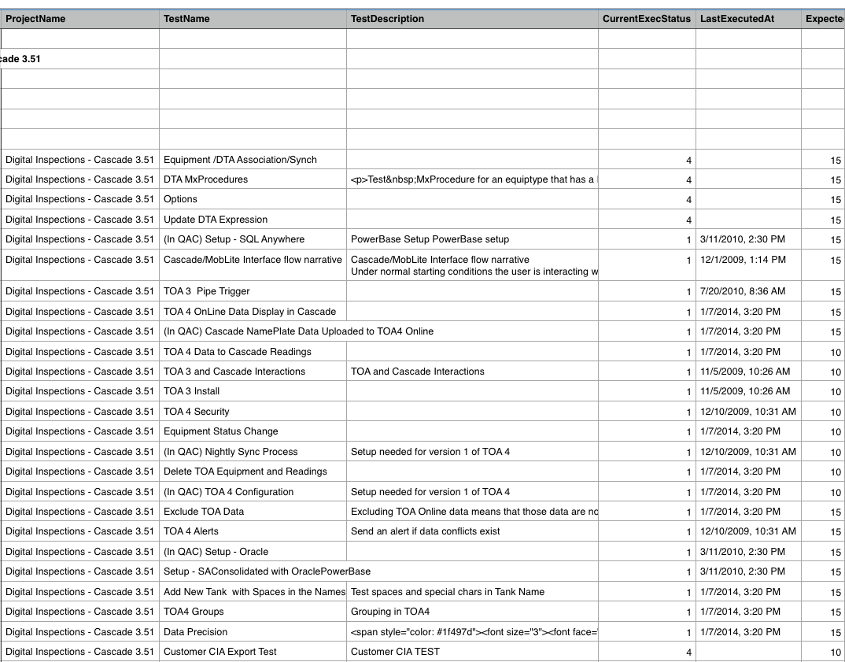

The best approach is probably to review the checklist before creating the plan, then create the plan, and then go back and use this Ultimate Test Planning Spreadsheet to decide whether each element on the checklist is (Y)es, done; (N)o, not done; (O)ut of scope; or (I)rrelevant.

We aimed to make a checklist that hits the sweet spot of good enough, enough of the time, for enough people because a comprehensive list would be overwhelming. What you see below tries to balance those concerns based on reviews from experts with decades of experience.

Once you have used the checklist once or twice, you may find that some elements, like usability or security, are always out of scope, or some of the test design approaches are favorites. The good thing about this tool is that it’s customizable. Feel free to create your own template and add or delete rows, and then come back here and leave comments about how you’ve customized the template. We’d love to hear from you!

Approaches to consider for test design

Feature list. Build a feature list and make the features into test cases. Sometimes called a traceability matrix, this can show holes in coverage and, sometimes, features that don’t need further work.
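As a rough illustration, a traceability matrix can be as small as a mapping from features to the test cases that touch them. The feature names and test case IDs below are made up for the example; a real matrix would come from your own feature list and test case management tool.

```python
# Hypothetical feature list and test cases; all names are illustrative only.
features = ["login", "search", "checkout", "gift cards"]

test_cases = {
    "TC-1": ["login"],
    "TC-2": ["search", "checkout"],
    "TC-3": ["checkout"],
}


def build_matrix(features, test_cases):
    """Map each feature to the test cases that exercise it."""
    matrix = {feature: [] for feature in features}
    for case_id, covered in sorted(test_cases.items()):
        for feature in covered:
            # A feature missing from the list still shows up in the matrix.
            matrix.setdefault(feature, []).append(case_id)
    return matrix


matrix = build_matrix(features, test_cases)

# Features with no test cases are the holes in coverage.
uncovered = [feature for feature, cases in matrix.items() if not cases]

for feature, cases in matrix.items():
    print(f"{feature:12} {', '.join(cases) or '(no tests)'}")
```

In this sketch, "gift cards" comes back with no tests, which is the kind of hole the matrix is meant to surface.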

User journey map. Instead of listing features, consider an entire flow of user behavior, from check-in to check-out. One common e-commerce term for this is “the path to purchase.” Some features that do not show up in any user journey might warrant less testing and less future development, especially if they are not popular in the logs.

Log mining. Organize log entries by feature, and sort to find the features that have the heaviest use; focus test design time on those core features.
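Here is a minimal sketch of that idea, assuming a simple access-log format of timestamp, method, and path, and assuming the first path segment is a good enough stand-in for the feature name. Both assumptions are for illustration; real logs will need their own parsing.

```python
from collections import Counter

# Hypothetical access-log lines in the form "<timestamp> <method> <path>".
log_lines = [
    "2024-01-01T10:00:00 GET /search?q=shoes",
    "2024-01-01T10:00:02 GET /cart",
    "2024-01-01T10:00:05 POST /checkout",
    "2024-01-01T10:00:09 GET /search?q=hats",
    "2024-01-01T10:00:11 GET /search?q=socks",
    "2024-01-01T10:00:15 GET /cart",
]


def feature_of(line):
    """Treat the first path segment as the feature name (an assumption)."""
    path = line.split()[2]
    return path.split("?")[0].strip("/").split("/")[0]


# Count entries per feature, then sort from heaviest use to lightest.
usage = Counter(feature_of(line) for line in log_lines)
ranked = usage.most_common()

for feature, hits in ranked:
    print(f"{feature:10} {hits}")
```

With this sample data, search ranks first, so it would get the largest share of test design time.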

Exception conditions. These are tests for when things go wrong: The database is down, the website is declined, the API does not return for so long that the browser times out. Quick attacks can overlap with this category.

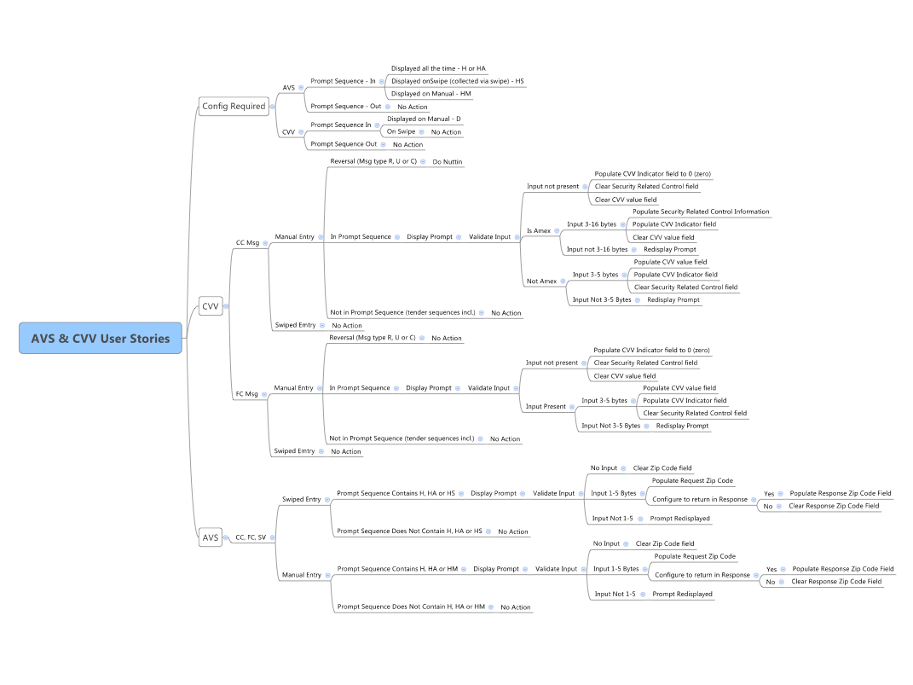

SFDIPOT. Pronounced “San Francisco Depot,” the mnemonic stands for structure, function, data, interfaces, platform, operations, and time. One possible exercise for test planning is to list these as nodes, then create sub-nodes for the risks related to these elements of the software. Once complete, review that list of risks with the test plan so far to make sure those risks are covered. In a large program, there may be one SFDIPOT diagram per major feature or subsystem.

Other heuristic test strategies. James Bach’s Heuristic Test Strategy (sometimes jokingly spelled “Heusseristic test strategy”), which defined SFDIPOT, is a treasure trove of considerations for understanding the testing mission, the product goal, and the quality objectives. Use it to come up with entirely new test approaches, and then review the plan to see if they are included.

Domain-based testing. Where quick attacks and exceptions require no knowledge of the software, a domain approach recognizes the different potential conditions and tries to find relevant and powerful tests for as many conditions as make sense. Domain testing requires a careful analysis of the requirements; decision tables are an example of domain-based testing. Simply put, when the requirements create a “wall of text” that implies more than a dozen test ideas, consider visualization as an intermediate step before finalizing the test plan.

A decision tree from which detailed test cases can derive
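For teams that prefer a table to a diagram, a decision table can be generated by enumerating every combination of conditions. The sketch below uses an invented shipping rule with three conditions; the conditions, values, and rule are hypothetical, and in a real plan the expected results should come from the requirements rather than from code.

```python
from itertools import product

# Hypothetical conditions for a shipping rule; not from any real requirement.
conditions = {
    "member": [True, False],
    "order_total": ["under_50", "50_or_more"],
    "destination": ["domestic", "international"],
}


def expected_shipping(member, order_total, destination):
    """Invented rule: international orders always pay for shipping;
    domestic orders ship free for members or for totals of 50 or more."""
    if destination == "international":
        return "paid"
    if member or order_total == "50_or_more":
        return "free"
    return "paid"


# One row per combination of condition values, plus the expected outcome.
names = list(conditions)
table = [
    dict(zip(names, combo), expected=expected_shipping(*combo))
    for combo in product(*conditions.values())
]

for row in table:
    print(row)
```

Each row is a candidate test case. Three conditions with two values each yield eight rows, which is already easier to review as a table than as a paragraph of requirements.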

RCRCRC. This mnemonic developed by Karen Johnson stands for recent, core, risky, configuration-sensitive, repaired, and chronic. Considering those elements can allow the team to find the highest priority areas for retesting, especially for regression.
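One simple way to apply the mnemonic is to tag each product area with the attributes that apply and retest the areas with the most tags first. The areas and tags below are hypothetical, and counting tags equally is a simplifying assumption; a team might reasonably weight "risky" or "core" more heavily.

```python
RCRCRC = (
    "recent",
    "core",
    "risky",
    "configuration_sensitive",
    "repaired",
    "chronic",
)

# Hypothetical product areas, each tagged with the attributes that apply.
areas = {
    "checkout": {"recent", "core", "risky"},
    "search": {"core"},
    "profile page": {"repaired", "chronic"},
    "help pages": set(),
}


def rank_for_regression(areas):
    """Order areas by how many RCRCRC attributes they carry, most first.

    Ties are broken alphabetically so the order is repeatable.
    """
    for name, tags in areas.items():
        unknown = tags - set(RCRCRC)
        if unknown:
            raise ValueError(f"{name}: unknown attributes {sorted(unknown)}")
    return sorted(areas, key=lambda name: (-len(areas[name]), name))


ranked = rank_for_regression(areas)
print(ranked)
```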

Planning

Risk and priority. Advocating for risk, impact, and priority allows testers to test the most important pieces first. This can provide crucial information to the programmers and actually accelerate delivery. Likewise, if the project is under any schedule pressure, a detailed plan creates a dilemma: Should the testing for the project be late, or should testing be incomplete? Plans that provide priority allow teams to make that decision a more educated one, with less risk on the table.
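To make the trade-off concrete, here is a small sketch that orders test items by likelihood times impact and then schedules them until the available hours run out. The items, the 1-to-5 scales, and the hour estimates are all invented for the example, and likelihood times impact is only one of several common ways to score risk.

```python
# Hypothetical test items: (name, likelihood 1-5, impact 1-5, estimated hours).
items = [
    ("payment processing", 4, 5, 6),
    ("password reset", 2, 4, 2),
    ("order history export", 3, 2, 4),
    ("footer links", 1, 1, 1),
]


def plan_within_budget(items, hours_available):
    """Take items in descending likelihood x impact order.

    An item is scheduled if it fits in the remaining hours;
    otherwise it is deferred.
    """
    ordered = sorted(items, key=lambda item: item[1] * item[2], reverse=True)
    scheduled, deferred = [], []
    remaining = hours_available
    for name, _likelihood, _impact, hours in ordered:
        if hours <= remaining:
            scheduled.append(name)
            remaining -= hours
        else:
            deferred.append(name)
    return scheduled, deferred


scheduled, deferred = plan_within_budget(items, hours_available=8)
print("Test now:", scheduled)
print("Deferred:", deferred)
```

The deferred list is the useful output here: it names exactly what is being left untested when the schedule is cut, so the decision is made with the risks in view.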

Structure. The test plan should address who will be doing the testing at what places, and how that work will be tracked. If it is tracked on an electronic tracking tool and people sign up for work in a self-organized fashion, that is fine; just document it. If the team always uses the same approach and project documents are repetitive, consider creating standards for the team, so plans only need to address what is different. Structure can also include how the work is divided — by feature, risk, charter, story, on a spreadsheet, in a test case management tool, or by some other mechanism.

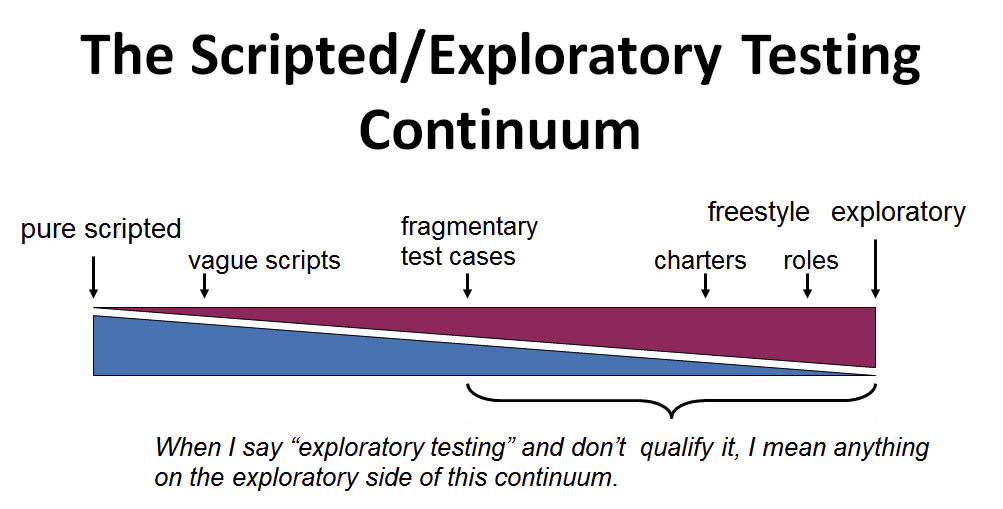

Human creativity. A test plan should elaborate on what level of creativity the testers will exercise. This can vary by tester skill level and feature. For example, a test plan might call for each feature to be tested in a precise way, yet only document the user journey at a high level, allocating a timebox of two hours for testers to test each major flow. James Bach’s exploratory testing continuum (used with permission) visualizes this difference:

Reporting. How people will demonstrate progress or blockages could slow down or possibly accelerate the project. Some modern tools automatically update web pages as people accomplish their work. Reporting should include what metrics are reported, and when.

Timing. A plan implies some aspect of when things will happen. This includes activities around developing a feature and just before a release, which can be anything from “shakedown” testing to a detailed regression of the entire system.

Tooling and automation. What risks will be covered by automation, and what risks will be measured by hand? Answering these questions can include considerations for CI/CD, staffing, resources, and time constraints, and it can also guide people to decide where to inject tooling and automation.

Interacting with other roles. Traditionally, developer testing and unit testing are not included in test planning, but modern test case management tools can make that visible. How bugs are reported, handled, handed off, and so on should be in a test plan or team standard.

Tests other than features

Accessibility. It can be easy to fail to plan features for people who need help using devices. Having a test planning checklist can help remind us to make sure every image has descriptive text, or that it is possible to read and use a website with only a keyboard. Adding this functionality will tend to make software easier to automate and navigate as well.

Internationalization and localization. If the software needs to support other languages, that is something to test for. Even if the software is English-only, if names are stored, you can easily run into accented characters from French and Spanish names. Testing for them can be done in five minutes, if someone lists the test on the plan.
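A five-minute check of this kind might look like the sketch below: save a few names with accented characters, read them back, and compare. The `store_and_load` function is a hypothetical stand-in that only round-trips through UTF-8; in practice it would call whatever saves and retrieves names in the system under test.

```python
import unicodedata

# Sample names with accented characters.
names = ["François", "Peña", "Zoë", "Núñez"]


def store_and_load(name):
    """Hypothetical stand-in for saving a name and reading it back.

    Here it only round-trips through UTF-8 bytes; replace it with
    calls to the real system under test.
    """
    return name.encode("utf-8").decode("utf-8")


def survives_round_trip(name):
    """Compare after NFC normalization so that composed and decomposed
    forms of the same character count as equal."""
    original = unicodedata.normalize("NFC", name)
    loaded = unicodedata.normalize("NFC", store_and_load(name))
    return original == loaded


failures = [name for name in names if not survives_round_trip(name)]
print("Failures:", failures)
```

An empty failure list means every name came back intact. A storage layer that silently drops or replaces accents would show up here immediately.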

Security. Code scanning, vulnerability scanning, and penetration testing are three considerations for any modern website.

Performance and load. Modern users expect a page load within two seconds, even if they are one of the thousands of people using the software. It is hard to think of this as “nonfunctional” testing if the software doesn’t function under load.

Usability. Many of us have seen software that met all the requirements yet was so painful to use that we rejected it. If testers are empowered to provide this feedback, that aspect should be documented on the test plan.

Finally, don’t forget what you forgot

Known (and unknown) unknowns. External dependencies that might not deliver, standards that are changing, systems that are not documented, and other things can go bump in the night. If you list these risks on the plan, at least you can start a conversation — even if you have no plan for them.

Out of scope. It is common for a test plan to view security, usability, accessibility, and even load testing as “out of scope.” If the plan lacks priority, then a response to schedule pressure when excessive defects delay the project could be out of the scope of the plan. Making these explicit in the plan allows management to take these risks intentionally, or to make sure they are accounted for in other ways.

Don’t forget to check out The Ultimate Software Test Planning Checklist to help you identify risks, learn how to handle them properly, and ultimately decide whether the elements on the checklist are “done,” “not done,” “out of scope,” or “irrelevant.”